Available PhD Projects

ExaGEO equips students with the skills, knowledge, and principles of exascale computing, drawing on geoscience, computer science, statistics, and computational engineering, to tackle some of the most pressing challenges in Earth and environmental sciences and computational research. Students will work under expert supervision in the following fields:

- Atmosphere, hydrosphere, cryosphere, and ecosystem processes and evolution

- Geodynamics, geoscience and environmental change

- Geologic hazard analysis, prediction and digital twinning

- Sustainability solutions in engineering, environmental, and social sciences

Each student will be positioned within a multidisciplinary supervisory team that includes one computational supervisor, one domain expert, and one supervisor from an Earth, environmental and/or social science research background. This ‘team-based’ supervisory approach is designed to enhance multidisciplinary training.

Please note that some projects may have incomplete supervisory teams; however, the full teams will be finalised before the start of the PhD.

Project Selection and Information

- You must apply for three projects. Each project has two project variations, i.e., teaser projects. During your first year, after working on both teaser projects (under the same supervisory team), you will select the project that best aligns with your interests. For further information on how this process will work, please see the FAQs section on our Apply page.

- Your PhD institution will be determined by the Principal Supervisor’s institutional affiliation.

- You can apply for projects at different institutions.

- Projects are grouped by research field.

- If you have any queries regarding a specific project, please contact the supervisor listed first (this will be the Principal Supervisor).

- Projects are funded via ExaGEO; this includes fees, stipends and a Research Training Support Grant. For further information, please see our Apply page.

Projects with a focus on Atmosphere, Hydrosphere, Cryosphere, and Ecosystem Processes and Evolution:


A Dangerous Duo: Exploring the Impact of Heatwaves on Air Pollution

Project institution: Lancaster University

Project supervisor(s): Prof Ryan Hossaini (Lancaster University), Dr Andrea Mazzeo (Lancaster University), Mr Michael Thomas (Reliable Insights), Dr Emma Eastoe (Lancaster University), Dr James Keeble (Lancaster University) and Dr Helen Macintyre (UK Health Security Agency)

Overview and Background

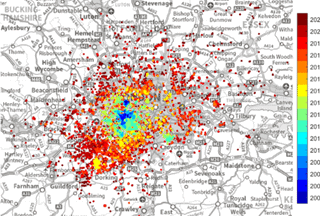

Heatwaves (i.e. sustained periods of exceptionally hot weather) are a well-recognised public health hazard. Strong evidence shows that the co-occurrence of extreme air pollution events during heatwaves amplifies health risks [1, 2]. The 2022 European heatwave, when the UK recorded its first ever temperature above 40°C, was accompanied by a widespread deterioration in air quality, with ground-level air pollutants exceeding safe limits across much of the continent [3]. Causal relationships between extreme temperature and air pollution are complex, involving weather patterns that promote air stagnation, pollutant emissions (e.g. from wildfires) and atmospheric photochemistry [4, 5]. These factors are not yet well understood, but they are important to disentangle because the frequency and intensity of summer heatwaves are expected to rise due to climate change [6].

This project’s overarching goals are to (1) characterize the response of air pollutants to heatwaves across Europe and to assess their combined health impacts, (2) provide new process-level insight into the causal relationship between extreme temperature and air pollution, and (3) evaluate and improve current systems for forecasting extreme air pollution events. This will be achieved through ultra-high-resolution air quality model simulations, analysis of air pollutant measurement data, and by exploring data-driven approaches to air quality forecasting and inference, including machine learning.

The successful candidate will join the vibrant atmospheric science research group (AtMOS) at the Lancaster Environment Centre (LEC) and benefit from a diverse supervisory team. The project includes a placement with a UK industry partner, Reliable Insights Ltd (www.reliable-insights.com / https://www.tangentworks.co.uk/), and a partnership with the UK Health Security Agency.

Methodology and Objectives

Teaser Project 1: What drives adverse air quality during heatwaves?

In Year 1, the goal will be to characterise the observed response of ground-level ozone (an important air pollutant) during European heatwaves. Using the severe summer 2022 heatwave as a case study, surface temperature and ozone measurements from hundreds of sites across Europe will be analysed (e.g. exploiting the extensive TOAR-II measurement database). Output from the UCI chemical transport model (CTM), a global model developed and maintained by the Lancaster atmospheric science group, will also be analysed to determine how well the model simulates the behaviour of ozone during the heatwave, including the observed ozone-temperature relationship. This work will provide a strong grounding in the observational datasets and atmospheric model used in subsequent years.

In Year 2, emphasis will be placed on understanding the various processes (meteorological, chemical, physical) responsible for elevating ozone during heatwaves. This will be achieved through carefully designed model sensitivity experiments that allow these factors to be disentangled and quantified. One line of enquiry will assess the importance of temperature-dependent ozone precursor emissions, such as emissions from wildfires (CO, NOx) and volatile organic compound emissions from stressed vegetation. Another will assess long-range transport of ozone into continental Europe from other world regions. As the project progresses in Years 2 and 3, its scope will expand to consider other notable heatwave years and air pollutants. The ability of regional-scale models to forecast extreme air pollution events will be explored, as will innovative data-driven approaches (e.g. machine learning).
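One common way to summarise an observed ozone-temperature relationship is the slope of a linear regression of daily ozone (for example, the maximum daily 8-hour mean) on daily maximum temperature, reported in ppb per °C. The sketch below assumes per-site daily series are already available as arrays; the function name and the optional warm-day cut-off are illustrative, and it is not presented as the project's prescribed method.

```python
import numpy as np


def ozone_temperature_slope(tmax_c, ozone_ppb, min_tmax_c=None):
    """Least-squares slope (ppb per degC) and intercept of daily ozone
    regressed on daily maximum temperature at one site.

    min_tmax_c optionally restricts the fit to warm days, since the
    relationship can differ at high temperatures.
    """
    t = np.asarray(tmax_c, dtype=float)
    o3 = np.asarray(ozone_ppb, dtype=float)
    keep = np.isfinite(t) & np.isfinite(o3)  # drop days with missing data
    if min_tmax_c is not None:
        keep &= t >= min_tmax_c
    slope, intercept = np.polyfit(t[keep], o3[keep], deg=1)
    return float(slope), float(intercept)


# Usage with synthetic data (a real analysis would loop over many sites)
rng = np.random.default_rng(42)
tmax = rng.uniform(15.0, 40.0, size=365)
ozone = 20.0 + 1.5 * tmax + rng.normal(0.0, 5.0, size=365)
print(ozone_temperature_slope(tmax, ozone))  # slope should be close to 1.5
```

Fitting separately above a temperature cut-off is one simple way to see whether ozone responds more strongly on the hottest days.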

Teaser Project 1 Objectives:

- Characterize the ozone-heatwave response across multiple European summers using surface and satellite measurements.

- Assess the ability of atmospheric models to capture extreme ozone events and the observed ozone-temperature relationship.

- Interpret the observed ozone-heatwave responses using high resolution model simulations and assess the roles of meteorological, chemical and physical factors.

- Explore data-driven approaches to air quality forecasting.

Teaser Project 2: Optimising air quality forecasts: exploring data-driven methods

Process-based air quality models are frequently used to simulate past (i.e. ‘hindcast’) air quality. This provides the information needed to quantify how air pollutant levels have changed over time (e.g. due to policy interventions) and the implications for human exposure and health. Such models are also increasingly used to alert the public to upcoming air pollution episodes (i.e. ‘forecast’). Like weather forecasts, air quality forecasts are provided up to several days ahead, though confidence generally decreases as the forecast range increases. Because the skill of air quality models is often inadequate, particularly for the most ‘extreme’ episodes, various approaches to ‘bias correct’ model forecasts before they are issued have emerged [7, 8].

In Year 1, the goal will be to examine the ability of WRF-Chem to simulate UK air quality in hindcast mode, with an emphasis on quantifying the model’s skill during recent heatwaves. WRF-Chem is a well-evaluated and widely adopted process model suitable for national-scale, high-resolution simulations. The model’s skill in simulating extreme air pollutant levels will be assessed using the UK’s extensive network of air pollutant measurements and a range of key performance metrics (e.g. hit rate and false alarm rate). This will provide a solid grounding in the strengths and weaknesses of air quality models and the approaches used to evaluate them.
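Hit rate and false alarm rate are derived from a 2×2 contingency table of observed versus simulated threshold exceedances. The minimal sketch below assumes paired daily observed and modelled concentrations at one site and a user-chosen exceedance threshold; the function name and threshold are hypothetical. Because "false alarm rate" and "false alarm ratio" are defined differently in the forecast verification literature, both are returned.

```python
import numpy as np


def exceedance_skill(observed, modelled, threshold):
    """Contingency-table scores for exceedances of a concentration threshold.

    An 'event' is any value at or above `threshold`.
    """
    obs_event = np.asarray(observed, dtype=float) >= threshold
    mod_event = np.asarray(modelled, dtype=float) >= threshold

    hits = int(np.sum(obs_event & mod_event))           # observed and simulated
    misses = int(np.sum(obs_event & ~mod_event))        # observed, not simulated
    false_alarms = int(np.sum(~obs_event & mod_event))  # simulated, not observed
    correct_negs = int(np.sum(~obs_event & ~mod_event))  # neither

    def ratio(num, den):
        return num / den if den > 0 else float("nan")

    return {
        # fraction of observed events that the model captured
        "hit_rate": ratio(hits, hits + misses),
        # fraction of observed non-events wrongly flagged as events
        "false_alarm_rate": ratio(false_alarms, false_alarms + correct_negs),
        # fraction of simulated events that did not occur
        "false_alarm_ratio": ratio(false_alarms, hits + false_alarms),
    }


print(exceedance_skill([50, 110, 120, 90, 130], [105, 115, 80, 95, 125], threshold=100))
```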

In Year 2, the effectiveness of a range of bias correction techniques applied to the WRF-Chem simulations will be explored, including machine-learning-based approaches [9]. Methods that improve the tail of the ozone distribution (e.g. ‘quantile mapping’) will be examined. Bias-corrected hindcasts will be produced and used to quantify the annual mortality burden attributable to long-term air pollutant exposure, along with the health impacts of elevated air pollution in conjunction with heatwaves [10].
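Empirical quantile mapping replaces each model value with the observed value at the same quantile of a training-period distribution. The sketch below is a minimal, stationary version that assumes paired training series of hindcast and observed concentrations at one site; the function name and number of quantiles are illustrative choices, not the project's configuration.

```python
import numpy as np


def quantile_map(model_train, obs_train, model_new, n_quantiles=100):
    """Empirical quantile mapping: map model values onto the observed
    distribution using quantiles estimated over a training period.

    Inputs outside the model's training range are clamped to the end
    quantiles (np.interp behaviour), so corrected values never exceed the
    observed training extremes.
    """
    probs = np.linspace(0.0, 1.0, n_quantiles)
    q_model = np.quantile(np.asarray(model_train, dtype=float), probs)
    q_obs = np.quantile(np.asarray(obs_train, dtype=float), probs)
    # Piecewise-linear transfer function from model quantiles to observed ones
    return np.interp(np.asarray(model_new, dtype=float), q_model, q_obs)


# Usage: a hindcast that is systematically 10 units too low
obs = np.arange(0.0, 101.0)
hindcast = obs - 10.0
print(quantile_map(hindcast, obs, [0.0, 50.0, 80.0]))  # shifted up by about 10
```

The clamping behaviour matters for extreme episodes: values beyond the training range cannot be mapped above the largest observed training value, so how to extrapolate the tails is a key design choice.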

In Year 3, the focus of the project will be to explore how data science approaches can improve the skill of air quality forecasts across a range of forecast lead times (24 to 96 hours). Important predictor variables for adverse UK air quality will be assessed and ranked, including the potential for their near-real-time assimilation (e.g. surface and satellite data). These data will be used to train machine learning models, and the resulting data-driven forecasts will be evaluated against process models. A key question will be whether a data-driven approach outperforms a traditional process-model forecast and, if so, under what conditions. Whether the two can be combined to produce optimal results will also be examined.

The development of data-driven solutions to air quality forecasting is a rapidly evolving field with strong potential for impactful science. During Year 3, a placement with Reliable Insights (a leading Lancaster-based time-series data specialist) will be undertaken. This placement will provide an excellent opportunity to develop industry links, to implement

Teaser Project 2 Objectives:

- Evaluate the skill of the WRF-Chem model in simulating air pollutants during recent UK heatwaves employing a range of metrics.

- Test and apply bias correction techniques to produce optimised WRF-Chem hindcasts and use these to quantify the human health effects of UK air pollution over time.

- Explore a data-driven air quality forecasting system for the UK, exploiting machine learning and other innovative data science approaches.

References & Further Reading

[1] Schnell, J.L., and Prather, M.J. (2017). Co-occurrence of extremes in surface ozone, particulate matter, and temperature over eastern North America. Proc. Natl. Acad. Sci., 114, 2854-2859, https://doi.org/10.1073/pnas.1614453114

[2] Gouldsbrough, L., Hossaini, R., Eastoe, E., & Young, P.J.Y. (2022). A temperature-dependent extreme value analysis of UK surface ozone, 1980-2019. Atmos. Env., 273, 118975.

[3] https://atmosphere.copernicus.eu/copernicus-scientists-warn-very-high-ozone-pollution-heatwave-continues-across-europe

[4] Pope, R. J., et al. (2023). Investigation of the summer 2018 European ozone air pollution episodes using novel satellite data and modelling, Atmos. Chem. Phys., 23, 13235-13253, https://doi.org/10.5194/acp-23-13235-2023.

[5] Otero, N., Jurado, O. E., Butler, T., and Rust, H. W. (2022). The impact of atmospheric blocking on the compounding effect of ozone pollution and temperature: a copula-based approach, Atmos. Chem. Phys., 22, 1905-1919, https://doi.org/10.5194/acp-22-1905-2022.

[6] Doherty, R.M., Heal, M.R., and O’Connor, F.M. (2017). Climate change impacts on human health over Europe through its effect on air quality, Environ. Health, 16, https://doi.org/10.1186/s12940-017-0325-2.

[7] Staehle, C., et al. (2024). Technical note: An assessment of the performance of statistical bias correction techniques for global chemistry–climate model surface ozone fields, Atmos. Chem. Phys., 24, 5953-5969, https://doi.org/10.5194/acp-24-5953-2024.

[8] Neal, L.S., Agnew, P., Moseley, S., Ordóñez, C., Savage, N.H. and Tilbee, M. (2014). Application of a statistical post-processing technique to a gridded, operational, air quality forecast, Atmos. Env., 98, 385-393, https://doi.org/10.1016/j.atmosenv.2014.09.004.

[9] Gouldsbrough, L., Hossaini, R., Eastoe, E., Young, P.J.Y. & Vieno, M. (2024). A machine learning approach to downscale EMEP4UK: analysis of UK ozone variability and trends. Atmos. Chem. Phys., 24, 3163-3196, https://doi.org/10.5194/acp-24-3163-2024.

[10] Macintyre, H.L., et al. (2023). Impacts of emissions policies on future UK mortality burdens associated with air pollution. Environ. Int., 174, 107862, https://doi.org/10.1016/j.envint.2023.107862.

Antarctic Ice Loss in High Definition: Analysing novel high-resolution satellite data streams for quantifying 21st century change

Project institution: Lancaster University

Project supervisor(s): Prof Mal McMillan (Lancaster University), Dr Dave McKay (University of Edinburgh), Dr Jenny Maddalena (Lancaster University) and Dr Israel Martinez Hernandez (Lancaster University)

Overview and Background


This project offers the exciting opportunity to be at the forefront of research to exploit the potential of exascale computing, for the purposes of satellite monitoring of Earth’s polar ice sheets, at scale. The polar regions are one of the most rapidly warming regions on Earth, with ongoing melting of ice sheets and ice caps making a significant contribution to global sea level rise. As Earth’s climate continues to warm throughout the 21st Century, ice melt is expected to accelerate, leading to large-scale social and economic disruption.

Satellites provide a unique tool for monitoring the impact of climate change upon the polar regions, and are key to tracking the ongoing contribution that ice masses make to sea level rise. With recent increases in data volumes, computing power and the use of data science, comes huge potential to rapidly advance our ability to monitor and predict changes across this vast and inaccessible region. However, currently this potential is not fully realized.

This project will place you at the forefront of this research, working to advance our current capabilities towards exascale computing through a combination of state-of-the-art satellite datasets, high-performance computing and innovative data science methods. You will be supported by a multidisciplinary supervisory team of statisticians, computer scientists and environmental scientists, with opportunities to contribute to projects run by the European Space Agency. Specifically, this project aims to develop new large-scale, high-resolution estimates of 21st century Antarctic ice loss and, in doing so, better constrain our estimates of past and future sea level rise.

Methodology and Objectives

Project Aim: This project aims to use new streams of satellite data, alongside advanced statistical algorithms and computing power, to transform our ability to monitor Antarctic Ice Sheet mass loss at high spatial resolution and at the pan-Antarctic scale. More specifically, the successful candidate will develop new estimates of ice sheet mass loss from high-volume, high-resolution satellite-derived Digital Elevation Models (DEMs), using efficient GPU-enabled processing workflows. These will be used to determine unique, large-scale estimates of 21st century ice sheet mass loss and glacier evolution.

Methods Used:

This project will build upon recent proof-of-concept work that has developed a novel pipeline for processing high-volume (hundreds of TB), extremely high-resolution (metre-scale) time series of Digital Elevation Models. The focus of this PhD will be to adapt these methods so that, for the first time, it is computationally feasible to apply them at the ice sheet scale, and then to develop a comprehensive, ice-sheet-wide assessment, which will ultimately improve our understanding of the impact of climate change on polar ice loss and sea level rise. This will require the use of Graphics Processing Units (GPUs) on High Performance Computing (HPC) clusters. As such, developing the code to run on this high-performance computing architecture will be a key element of the project.

Within the first year of the PhD, the successful candidate will have the opportunity to explore 2 teaser projects, one of which will then be taken forward into subsequent years.

Teaser Project 1: Towards pan-Antarctic, high-resolution monitoring of ice loss

This teaser project will work to translate the current proof-of-concept DEM pipeline into a system that can be run efficiently at scale, and then to test its use at a number of key Antarctic study sites. Specifically, state-of-the-art satellite altimetry will be combined with high-resolution DEMs from the Reference Elevation Model of Antarctica (REMA) project to generate estimates of glacier elevation change covering the period 2010-present. Study sites will be selected to cover those of high scientific interest (e.g. Pine Island Glacier, Totten Glacier) and to explore performance within diverse glaciological settings (e.g. large ice streams, narrow outlet glaciers with nearby nunataks). A key component of this initial project will be to refactor the current code for GPU-enabled systems, alongside developing better approaches to memory management, data infrastructure, etc. If continued beyond Year 1, the ultimate ambition of this teaser project will be to exploit the full pan-Antarctic archive of REMA strips to (1) generate new ultra-high-resolution Antarctic mass balance estimates, and (2) improve process understanding of the physical drivers of current ice loss.
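A common building block for turning a stack of co-registered DEMs into elevation change is a per-pixel linear trend fitted through time. The vectorised NumPy sketch below assumes the DEMs are already co-registered onto a common grid, with NaN marking gaps; the function names are hypothetical, and converting volume to mass with a single density (917 kg m⁻³, pure ice) is a simplifying assumption, since real conversions must account for firn. Array libraries such as CuPy mirror much of the NumPy API, which is one possible route to moving this kind of computation onto GPUs.

```python
import numpy as np


def elevation_change_rate(dem_stack, years):
    """Per-pixel least-squares rate of elevation change (m/yr).

    dem_stack: (n_times, ny, nx) elevations in metres, NaN where no data.
    years: decimal-year acquisition times, length n_times.
    Pixels with fewer than two valid DEMs are returned as NaN.
    """
    h = np.asarray(dem_stack, dtype=float)
    t = np.asarray(years, dtype=float)[:, None, None]  # broadcast over pixels
    valid = np.isfinite(h)
    n = valid.sum(axis=0)

    with np.errstate(invalid="ignore", divide="ignore"):
        # Means over the valid samples at each pixel
        t_mean = np.where(valid, t, 0.0).sum(axis=0) / n
        h_mean = np.where(valid, h, 0.0).sum(axis=0) / n
        # Centred values, zeroed where data are missing
        dt = np.where(valid, t - t_mean, 0.0)
        dh = np.where(valid, h - h_mean, 0.0)
        slope = (dt * dh).sum(axis=0) / (dt * dt).sum(axis=0)
    return np.where(n >= 2, slope, np.nan)


def mass_change_rate_gt(rate_m_per_yr, pixel_area_m2, density_kg_m3=917.0):
    """Sum a rate grid to Gt/yr, assuming a single (ice) density."""
    volume_m3 = np.nansum(rate_m_per_yr) * pixel_area_m2
    return volume_m3 * density_kg_m3 / 1e12
```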

Teaser Project 2: Multi-sensor integration for multi-decadal monitoring

The second teaser project will again aim to establish efficient, scalable DEM-processing pipelines for resolving Antarctic Ice Sheet mass loss, but this time focusing on extending the observational record to generate time series spanning a quarter of a century (2000-2025). This requires adapting the proof-of-concept pipeline that forms the basis of Teaser Project 1 by adding functionality to process data from the ASTER mission (2000-present) over Antarctica, thus enabling a long-term record to be derived. Initial work has already been performed to process ASTER data over Greenland using conventional (CPU) systems. Hence, the purpose of this teaser project will be (1) to adapt this existing code so that it runs over Antarctica, and (2) to refactor it for deployment on GPUs, with a view to future scale-up. If continued beyond Year 1, the ultimate ambition of this teaser project would be to (1) generate new long-term records of Antarctic glacier evolution, and (2) improve process understanding of the physical drivers of current ice loss.

References & Further Reading

Here are some tasters of our work and its impact:

https://www.weforum.org/agenda/2019/05/antarctica-s-ice-is-melting-5-times-faster-than-in-the-90s/

https://www.bbc.co.uk/news/science-environment-47461199

The candidate will also join the UK Centre for Polar Observation and Modelling and the Centre of Excellence in Environmental Data Science.


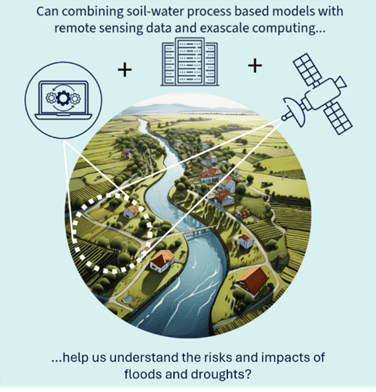

Computationally scalable data fusion for real-time water quantity and quality forecasting

Project institution: University of Glasgow

Project supervisor(s): Dr Abdollah Jalilian (University of Glasgow), Dr Faiza Samreen (UKCEH), Dr Andrew Elliott (University of Glasgow), Prof Claire Miller (University of Glasgow), Prof Andrew Tyler (University of Stirling) and Prof Peter Hunter (University of Stirling)

Overview and Background

This project will develop machine learning and statistical methods for real-time forecasting in water catchments via data fusion with uncertainty quantification. It will use and develop advanced AI models (building on and extending work such as Allen et al., 2025, and relevant foundation models) to fuse in situ sensor and satellite data (optical and/or radar) for hydrology and surface water quality in river catchments. The project will also assess the generalisability of the developed methods across multiple river catchments.

Data volumes can be very large: for example, data are collected approximately every 15 minutes from multiple in situ sensors, and satellite data have a resolution of 10 m. To obtain fast (real-time or near-real-time), reliable and computationally efficient (and therefore more environmentally friendly) models, this project specifically targets the development of GPU programming for scalable analytics and will consider the advantages of cloud GPUs and other related platforms, for example EDITO, to support transferability and impact.

This project is a collaboration with domain expert colleagues at University of Stirling and Scottish Water and will link to NERC projects such as SenseH20 and MOT4Rivers and the Forth-ERA digital observatory of the Forth Catchment.

Methodology and Objectives

The AI-based models will be developed using the latest generation of deep learning approaches (such as transformer models, physics-informed losses, etc.). As detailed in the background, this project will leverage existing model frameworks (such as Aardvark), foundation models that can be specialised, and domain-specific pipelines constructed as appropriate. The fusion methodologies will be tested on a number of downstream tasks, most notably predicting water quantity/quality values far from the sensor locations (validated by cross-validation) and forecasting. While the frameworks will be tested on the dataset in question, the assumption is that the models can be transferred to other localities, and testing the portability of these approaches will form part of the later stages of this project.

Teaser Project 1:

The first teaser project will focus on developing an initial methodology for data fusion, based on taking an off-the-shelf deep learning approach, and applying it to the dataset in question to explore the performance. This will be compared with standard statistical approaches (e.g. Kriging and hierarchical Bayesian spatiotemporal models) to understand the relative advantages and disadvantages of this approach both in accuracy of prediction and computational time. Based on these initial findings and limitations of the approach, we will consider additional changes to the architecture including software optimisations (including GPU programming) or indeed the development of a different approach which will naturally extend into a full PhD project should the student decide to pursue this.
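As a concrete reference point for the statistical baseline, simple kriging with a fixed covariance model is equivalent to Gaussian process regression with a known constant mean. The sketch below assumes a squared-exponential covariance with hand-set parameters (in practice these would be estimated, e.g. from a variogram or by maximum likelihood) and includes a hold-out RMSE helper of the kind used to score predictions away from sensor locations; all names are illustrative.

```python
import numpy as np


def sq_exp_cov(a, b, sill, length_scale):
    """Squared-exponential covariance between point sets a (n, d) and b (m, d)."""
    d2 = ((a[:, None, :] - b[None, :, :]) ** 2).sum(axis=-1)
    return sill * np.exp(-0.5 * d2 / length_scale**2)


def simple_krige(x_train, y_train, x_new, sill=1.0, length_scale=1.0, nugget=1e-6):
    """Predictive mean and latent-field variance at x_new, using the sample
    mean as the known constant mean (simple kriging / GP regression)."""
    x_train = np.asarray(x_train, dtype=float)
    x_new = np.asarray(x_new, dtype=float)
    y = np.asarray(y_train, dtype=float)
    mu = y.mean()

    K = sq_exp_cov(x_train, x_train, sill, length_scale) + nugget * np.eye(len(y))
    k_star = sq_exp_cov(x_new, x_train, sill, length_scale)  # (m, n)
    L = np.linalg.cholesky(K)                                 # K = L L^T
    alpha = np.linalg.solve(L.T, np.linalg.solve(L, y - mu))  # K^-1 (y - mu)
    mean = mu + k_star @ alpha
    v = np.linalg.solve(L, k_star.T)                          # L^-1 k_star^T
    var = np.maximum(sill - (v**2).sum(axis=0), 0.0)
    return mean, var


def holdout_rmse(x, y, test_idx, **cov_params):
    """RMSE at held-out locations after fitting on the remaining sites."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    test = np.zeros(len(y), dtype=bool)
    test[test_idx] = True
    pred, _ = simple_krige(x[~test], y[~test], x[test], **cov_params)
    return float(np.sqrt(np.mean((pred - y[test]) ** 2)))
```

The Cholesky solves scale cubically with the number of sites, which is one reason such baselines become expensive for dense sensor and satellite data.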

Teaser Project 2:

The second teaser project is strongly rooted in uncertainty quantification, i.e. understanding how certain we should be about the model’s predictions. AI-based approaches, while regularly delivering high accuracy, often lack strong probabilistic frameworks for uncertainty quantification. To address this, we will employ a mixture of approaches, ranging from Monte Carlo-based and variational inference methods that leverage the fast inference time of AI models to emulation-based approaches. Given the data fusion pipelines we will be developing, this will require understanding both the uncertainty induced by the model and the uncertainty in the observations themselves. This is computationally intensive, so part of this teaser project will involve understanding this complexity and optimising it, both through computational means (e.g. GPU coding and HPC) and through statistical techniques that use computational resources as efficiently as possible (and limit their environmental impact).
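One simple Monte Carlo ingredient of the kind described above is to propagate observation uncertainty through a fast-to-evaluate model: perturb the inputs according to their assumed error, re-run the model many times, and summarise the spread of the outputs. The sketch assumes independent Gaussian input errors with a known standard deviation and treats the model as a fixed function (`predict` is a placeholder for, e.g., a trained network's forward pass). It does not capture model or parameter uncertainty, which the variational or emulation-based approaches would need to address.

```python
import numpy as np


def mc_predictive_interval(predict, x_obs, obs_sd, n_samples=1000, level=0.95, seed=0):
    """Monte Carlo propagation of observation error through a model.

    Each draw adds independent Gaussian noise (standard deviation obs_sd) to
    the inputs and re-runs `predict`; the spread of the outputs gives a
    central interval at the requested level. Model/parameter uncertainty is
    not represented here.
    """
    rng = np.random.default_rng(seed)
    x_obs = np.asarray(x_obs, dtype=float)
    draws = np.stack([
        predict(x_obs + rng.normal(0.0, obs_sd, size=x_obs.shape))
        for _ in range(n_samples)
    ])
    tail = (1.0 - level) / 2.0
    lower, upper = np.quantile(draws, [tail, 1.0 - tail], axis=0)
    return draws.mean(axis=0), lower, upper


# Usage with a toy stand-in for a trained model: y = 2x
mean, lower, upper = mc_predictive_interval(lambda x: 2.0 * x, [1.0, 5.0], obs_sd=0.1)
```

Because every draw is an independent forward pass, the loop is straightforward to batch or parallelise, which is where fast AI inference and GPUs help.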

References & Further Reading

Allen, A., Markou, S., Tebbutt, W., Requeima, J., Bruinsma, W. P., Andersson, T. R., … & Turner, R. E. (2025). End-to-end data-driven weather prediction. Nature, 641(8065), 1172-1179. 10.1038/s41586-025-08897-0

Andersson, T. R. et al. (2021). Seasonal Arctic sea ice forecasting with probabilistic deep learning. Nature Communications, 12, 5124. 10.1038/s41467-021-25257-4

Colombo, P., Miller, C., Yang, X., O’Donnell, R., & Maranzano, P. (2025). Warped multifidelity Gaussian processes for data fusion of skewed environmental data. Journal of the Royal Statistical Society Series C: Applied Statistics, 74(3), 844-865. 10.1093/jrsssc/qlaf003

Wilkie, C. J., Miller, C. A., Scott, E. M., O’Donnell, R. A., Hunter, P. D., Spyrakos, E., & Tyler, A. N. (2019). Nonparametric statistical downscaling for the fusion of data of different spatiotemporal support. Environmetrics, 30(3), e2549. 10.1002/env.2549


Detecting hotspots of water pollution in complex constrained domains and networks

Project institution: University of Glasgow

Project supervisor(s): Dr Mu Niu (University of Glasgow), Dr Craig Wilkie (University of Glasgow), Prof Cathy Yi-Hsuan Chen (University of Glasgow) and Dr Michael Tso (UKCEH)

Overview and Background

Technological developments with smart sensors are changing the way that the environment is monitored. Many such smart systems are under development, with small, energy efficient, mobile sensors being trialled. Such systems offer opportunities to change how we monitor the environment, but this requires additional statistical development in the optimisation of the location of the sensors.

The aim of this project is to develop a mathematical and computational inferential framework to identify the best locations to deploy sensors in complex constrained domains and networks, enabling improved detection of water contamination. The proposed method can also be applied to regression, classification and optimisation problems on a latent manifold embedded in a higher-dimensional space.

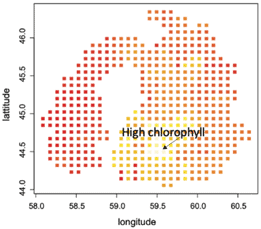

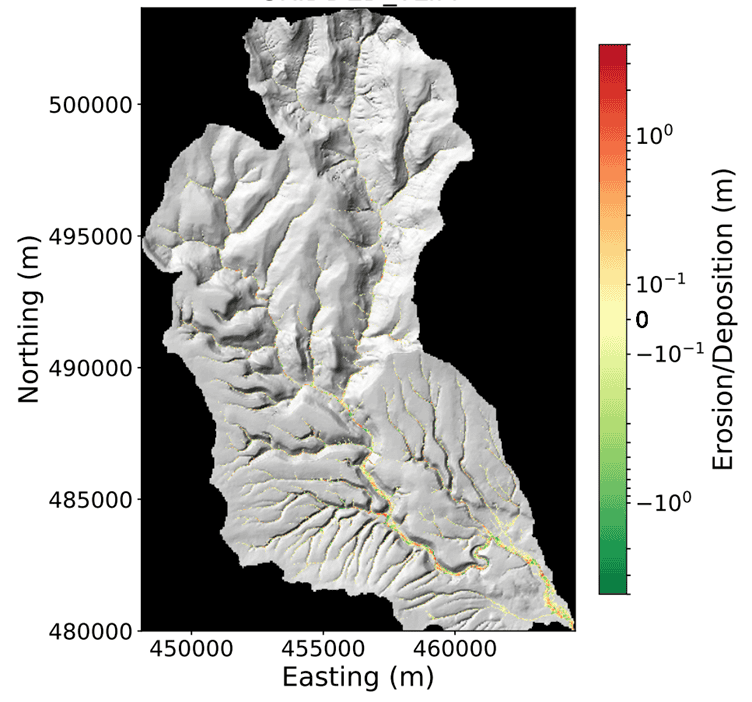

Figure 1: Examples of complex constrained domains. Chlorophyll concentrations in the Aral Sea (Wood et al., 2008).

Methodology and Objectives

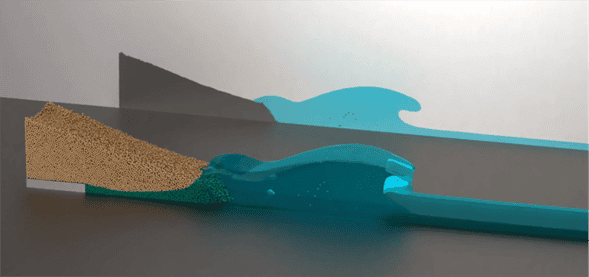

The idea of on-site sensors to detect water contaminants has a rich history. Since water flows at finite speeds, placing sensors strategically reduces time until detection. The mathematical analysis is often made difficult by the need to model the nonlinear dynamical systems of hydraulics within a non-Euclidean space such as constrained domains (lake or river, Wood et al., 2008) or networks (pipe network, Oluwaseye, et al., 2018). It requires solving large nonlinear systems of differential equations in the complex domain and is difficult to apply to even moderate-sized problems.

This proposed PhD project aims to develop new methods to improve environmental sampling, enabling improved estimation of water pollution and associated uncertainty that appropriately accounts for the geometry and topology of the water body.

Methods Used:

Intrinsic Bayesian Optimization (BO) on complex constrained domains and networks uses the predictions and uncertainty quantification of intrinsic Gaussian processes (GPs) (Niu et al., 2019, 2023) to direct the search for water pollution. As new detections are observed, the search for a hotspot can be updated sequentially.

The key ingredient of BO is the GP prior, which captures beliefs about the behaviour of the unknown black-box function in the complex domain. The student will develop intrinsic BO on non-Euclidean spaces, such as complex constrained domains and networks, using state-of-the-art GPs on manifolds and GPs on graphs. Extending the idea of estimating covariance functions on manifolds, the project aims to estimate the heat kernel of the point cloud, allowing the incorporation of the intrinsic geometry of the data and a potentially complex interior structure.
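To make the idea of a GP prior on a network concrete, one simple construction uses the graph heat kernel exp(-tL), where L is the graph Laplacian, as the prior covariance over nodes, and then chooses the next sampling location with an upper-confidence-bound (UCB) acquisition rule. This is a simplified stand-in for illustration, not the intrinsic GP methodology of Niu et al.; the diffusion time `t`, the exploration weight `beta`, the zero prior mean and the function names are all assumptions.

```python
import numpy as np


def heat_kernel(adjacency, t=1.0):
    """Graph heat kernel exp(-t L), with L the combinatorial graph Laplacian."""
    A = np.asarray(adjacency, dtype=float)
    L = np.diag(A.sum(axis=1)) - A
    evals, evecs = np.linalg.eigh(L)  # L is symmetric positive semi-definite
    return (evecs * np.exp(-t * evals)) @ evecs.T


def gp_ucb_next_node(K, observed_idx, y_obs, noise=1e-3, beta=2.0):
    """Zero-mean GP posterior over all nodes (prior covariance K) and the
    unobserved node with the highest upper confidence bound."""
    obs = np.asarray(observed_idx)
    y = np.asarray(y_obs, dtype=float)
    K_oo = K[np.ix_(obs, obs)] + noise * np.eye(len(obs))
    K_ao = K[:, obs]
    mean = K_ao @ np.linalg.solve(K_oo, y)
    # diag(K - K_ao K_oo^-1 K_oa), computed without forming the full matrix
    var = np.diag(K) - np.einsum("ij,ji->i", K_ao, np.linalg.solve(K_oo, K_ao.T))
    ucb = mean + beta * np.sqrt(np.maximum(var, 0.0))
    ucb[obs] = -np.inf  # do not propose existing sensor sites
    return int(np.argmax(ucb)), mean, var


# Usage: a 5-node chain (e.g. a stretch of sewer) with one sensor at node 0
A = np.diag(np.ones(4), 1) + np.diag(np.ones(4), -1)
K = heat_kernel(A, t=1.0)
next_node, mean, var = gp_ucb_next_node(K, observed_idx=[0], y_obs=[0.0])
print(next_node)  # 4: the node least informed by the existing sensor
```

After each new measurement, the observed set grows and the rule is re-run, which is the sequential updating described above.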

The application areas are water quality in lakes with complex domains (such as the Aral Sea) and pollution sources in a city’s sewage network. The methods would have the potential to inform about emergent water pollution events like algal blooms, providing an early warning system, and help to identify pollution sources.

Teaser Project 1 Objectives: In the first teaser project, the student will apply intrinsic GPs to water quality data, seeking to understand the complex patterns of water quality in non-Euclidean spaces (both continuous domains with complex boundaries and network domains). The student will apply existing methods to small-scale datasets, getting a feel for the methodology used in this area. This work could evolve into a PhD with a focus on developing computationally demanding methods for modelling water quality and detecting hotspots over complex domains. Parallelisation over GPUs would enable modelling across large areas, with the high data volumes typical of high-spatial-resolution water quality data.

Teaser Project 2 Objectives: In the second teaser project, the student will expand their work to the spatio-temporal (or manifold-temporal) setting, incorporating both complex spatial and temporal structures to fully explain the changing nature of the water quality patterns. Again, this teaser project will involve applying existing methods to small-scale datasets. Due to the high computational complexity of spatio-temporal models, this project has the potential to evolve into a PhD focused on developing highly computationally efficient methods, particularly through parallelisation on GPUs.

The student will benefit from the extensive expertise of the supervisory team. Dr Niu specializes in statistical inference in Non-Euclidean spaces, with application in ecology and environmental science. Dr Wilkie has a background in developing spatiotemporal data fusion approaches for environmental data, focussing on satellite and in-lake water quality data. Prof Chen specializes in network modeling, statistical inference, data science, machine learning and economics. Dr Tso is an environmental data scientist with strong computational background and a portfolio of work on water quality monitoring, including adaptive sampling.

References & Further Reading

- Niu et al. (2019). Intrinsic Gaussian processes on complex constrained domain. J. Royal Stat. Soc. Series B, 81(3).

- Niu et al. (2023). Intrinsic Gaussian processes on unknown manifold with probabilistic geometry. Journal of Machine Learning Research, 24(104).

- Oluwaseye et al. (2018). A state-of-the-art review of an optimal sensor placement for contaminant warning system in a water distribution network. Urban Water Journal, 15(10), 985–1000.

- Giudicianni et al. (2020). Topological Placement of Quality Sensors in Water-Distribution Networks without the Recourse to Hydraulic Modeling. Journal of Water Resources Planning and Management, 146(6).

- Wood, S. N., Bravington, M. V. and Hedley, S. L. (2008). Soap film smoothing. J. Royal Stat. Soc. Series B, 70, 931–955.


Firn Futures: Examining Antarctic Ice Shelf Stability with GPU-Accelerated Firn Modelling

Project institution: Lancaster University

Project supervisor(s): Dr Amber Leeson (Lancaster University), Dr Matt Speers (Lancaster University), Dr Katie Miles (Lancaster University) and Dr Vincent Verjans (Barcelona Supercomputing Centre)

Overview and Background

Antarctic ice shelves regulate the discharge of grounded ice into the ocean, and their collapse can accelerate sea level rise (Berthier et al., 2012). The Larsen C Ice Shelf (LCIS) in particular is thought to be vulnerable to surface melt and hydrofracture, processes which may lead to eventual collapse and which are controlled by the firn layer’s ability to store and refreeze meltwater. Current firn models simplify these processes to reduce computational demand (e.g. Verjans et al., 2019), but this introduces uncertainty and risks misrepresenting thresholds for saturation and collapse. This project will exploit GPU acceleration to test and improve meltwater physics in the Community Firn Model (CFM, Stevens et al., 2020) against field and satellite observations and use the developed model to simulate the evolution of the firn layer on the LCIS under predicted climate warming. By resolving these processes at scale, the project will deliver new predictions of LCIS stability under future climate forcing.

Methodology and Objectives

This PhD project integrates novel field observations (Hubbard et al., 2016), satellite data, and firn modelling to address a key uncertainty in Antarctic climate science: when will the firn layer of the Larsen C Ice Shelf (LCIS) saturate, and what are the consequences for its stability? The programme begins with two exploratory projects of approximately six months each, both centred on the Community Firn Model (CFM). These projects will provide complementary experience in model physics and high-performance computation. Following this training year, the student will select one pathway to pursue in depth from Year 2 onwards, ultimately delivering a substantive contribution to predicting ice-shelf vulnerability.

Teaser Project 1: Advancing meltwater physics in the Community Firn Model

Firn modelling in high-melt environments is fundamentally limited by how liquid water processes are represented. Meltwater percolation, refreezing, and the possible development of firn aquifers are central to whether surface melt is buffered or contributes directly to destabilisation. Current CFM schemes necessarily simplify these processes, often assuming one-dimensional percolation and instantaneous refreezing, which can underestimate storage depth and persistence.

This project will benchmark the CFM’s existing meltwater parameterisations against borehole-derived density profiles and refrozen ice layers sampled during field campaigns on LCIS, together with satellite observations of meltwater ponding (e.g. Corr et al., 2022) and surface elevation change. The student will then develop an inventory of physically more complete options, such as multi-phase flow and coupled thermodynamics, which could be implemented within the CFM framework using GPU acceleration to reduce the computational penalty of these enhancements and enable higher spatial and temporal resolution.
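The simplified treatment described above (one-dimensional percolation with instantaneous refreezing) is often implemented as a "bucket" scheme. The sketch below is a minimal, hypothetical illustration of that idea, not CFM code: each layer refreezes as much meltwater as its cold content and remaining pore space allow, then holds liquid up to an assumed irreducible saturation, and passes the rest downward. The `Layer` structure, the 2% saturation and the physical constants are illustrative assumptions; heat conduction, densification and lateral flow are ignored.

```python
from dataclasses import dataclass

C_ICE = 2097.0        # specific heat capacity of ice, J kg^-1 K^-1
L_FUSION = 334_000.0  # latent heat of fusion, J kg^-1
RHO_ICE = 917.0       # density of ice, kg m^-3
RHO_WATER = 1000.0    # density of water, kg m^-3


@dataclass
class Layer:
    dz: float           # thickness, m
    rho: float          # density, kg m^-3
    temp: float         # temperature, deg C (<= 0)
    water: float = 0.0  # retained liquid water, kg m^-2


def bucket_percolate(layers, melt, irreducible_saturation=0.02):
    """Route surface melt (kg m^-2) down a column; return runoff from the base.

    Hypothetical bucket scheme: instantaneous refreezing limited by cold content
    and pore space, then retention up to an irreducible saturation.
    """
    water = melt
    for layer in layers:
        if water <= 0.0:
            break
        mass = layer.rho * layer.dz  # kg m^-2 before refreezing
        # Refreezing is limited by the energy needed to warm the layer to 0 C
        # and by the pore space left before the layer becomes solid ice.
        cold_limit = C_ICE * mass * max(-layer.temp, 0.0) / L_FUSION
        pore_limit = max(RHO_ICE - layer.rho, 0.0) * layer.dz
        refrozen = min(water, cold_limit, pore_limit)
        water -= refrozen
        # Enthalpy relative to ice at 0 C: cold content plus released latent heat.
        enthalpy = C_ICE * mass * layer.temp + L_FUSION * refrozen
        layer.rho += refrozen / layer.dz
        layer.temp = min(0.0, enthalpy / (C_ICE * (mass + refrozen)))
        # Hold liquid in the remaining pore space; the excess percolates further.
        pore_volume = layer.dz * (1.0 - layer.rho / RHO_ICE)
        capacity = irreducible_saturation * pore_volume * RHO_WATER
        retained = min(water, max(capacity - layer.water, 0.0))
        layer.water += retained
        water -= retained
    return water
```

In a cold column all the melt refreezes near the surface; in temperate (0 °C) firn it is only retained or runs off. Replacing this single-pass rule with a physically fuller flow scheme is what raises the computational cost that GPU acceleration is meant to offset.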

Teaser Project 2: GPU-accelerated simulations of firn evolution under extreme melt

The second project develops expertise in scalable Earth system modelling by implementing the CFM on GPU-enabled high-performance clusters. Starting from borehole-constrained initial conditions, the model will be forced with outputs from regional climate models covering the past two decades. Particular emphasis will be placed on extreme melt events associated with atmospheric rivers, which have played a central role in past ice-shelf collapse (Wille et al., 2022).

The student will design sensitivity experiments to explore firn response to a range of forcing scenarios, including anomalously warm summers, rainfall events, and multi-year accumulation variability. By exploiting GPU acceleration, simulations will be scaled to high spatial resolution across LCIS, and large ensembles will be run to explore uncertainty. These experiments will provide new insight into the thresholds governing firn saturation and the role of rare but intense events in destabilising ice shelves.

Beyond Year 1:

At the end of Year 1, the student will select a pathway for further development. If Project 1 is chosen, the PhD will concentrate on improving the physics of meltwater flow in the CFM and integrating field and satellite observations to constrain model predictions. If Project 2 is chosen, the emphasis will be on producing GPU-accelerated projections of firn evolution and collapse risk to 2100 under multiple climate scenarios, including an assessment of uncertainty in the predictions.

Long-term objectives

Whichever pathway is selected, the overarching aims of the PhD are to:

- Advance the representation of firn processes in high-melt Antarctic environments.

- Develop innovative methods for combining borehole and satellite data with physically based modelling.

- Exploit exascale computing to enable continent-scale, ensemble firn simulations with improved physics.

- Provide new projections of Antarctic ice shelf vulnerability, ultimately contributing to sea-level rise assessments.

References & Further Reading

Berthier, E., Scambos, T. A., & Shuman, C. A. (2012). Mass loss of Larsen B tributary glaciers (AP) unabated since 2002. Geophysical Research Letters, 39, 6.

Corr, D., Leeson, A., McMillan, M., Zhang, C., & Barnes, T. (2022). An inventory of supraglacial lakes and channels across the West Antarctic Ice Sheet. Earth System Science Data, 14, 209–228. https://doi.org/10.5194/essd-14-209-2022

Hubbard, B., Luckman, A., Ashmore, D., et al. (2016). Massive subsurface ice formed by refreezing of ice-shelf melt ponds. Nature Communications, 7, 11897. https://doi.org/10.1038/ncomms11897

Stevens, C. M., Verjans, V., Lundin, J. M. D., Kahle, E. C., Horlings, A. N., Horlings, B. I., & Waddington, E. D. (2020). The Community Firn Model (CFM) v1.0. Geoscientific Model Development, 13, 4355–4377. https://doi.org/10.5194/gmd-13-4355-2020

Verjans, V., Leeson, A. A., Stevens, C. M., MacFerrin, M., Noël, B., & van den Broeke, M. R. (2019). Development of physically based liquid water schemes for Greenland firn-densification models. The Cryosphere, 13, 1819–1842. https://doi.org/10.5194/tc-13-1819-2019

Wille, J. D., Favier, V., Jourdain, N. C., et al. (2022). Intense atmospheric rivers can weaken ice shelf stability at the Antarctic Peninsula. Communications Earth & Environment, 3, 90. https://doi.org/10.1038/s43247-022-00422-9

-

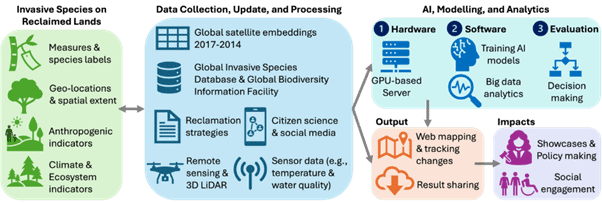

Forests in the Exascale Era: High-resolution Modelling of Global Biomass Drivers, Loss and Recovery

Project institution: University of Glasgow

Project supervisor(s): Dr Wenxin Zhang (University of Glasgow), Prof Peter Atkinson (Lancaster University), Dr Dave McKay (University of Edinburgh), Dr Vasilis Myrgiotis (UKCEH) and Dr Emma Robinson (UKCEH)

Overview and Background

Forest carbon sinks and sources are central to estimating the global carbon budget, but quantifying their biomass dynamics, especially how losses and recoveries are associated with natural and anthropogenic drivers, is a major challenge. A recent dataset of global drivers of forest loss at 1 km resolution (2001–2022) classifies loss into agriculture, logging, wildfire, infrastructure and natural disturbances (with ~90.5% accuracy), providing a new, spatially explicit basis for attribution studies. However, pre-2001 biomass dynamics and drivers remain largely unexplored. By combining the drivers dataset with exascale-enabled simulations using JULES and Earth observation products (e.g. L-VOD, NDVI), this project aims to extend driver reconstruction back to 1991, validate biomass changes, and simulate fine-resolution biomass dynamics under forest loss and recovery globally.

Methodology and Objectives

This PhD proposal combines exascale computation, GPU acceleration, land surface modelling, and satellite Earth observation (EO) datasets to quantify the impacts of forest loss and recovery on above- and below-ground biomass (AGB and BGB) over the longest possible satellite-informed time series. The central modelling framework is the Joint UK Land Environment Simulator (JULES), advanced into an exascale-ready version (ExaJULES) to resolve biomass processes globally at 1 km resolution. ExaJULES will simulate carbon allocation, disturbance, and regrowth processes across AGB and BGB pools.

Satellite datasets provide the foundation for both driver attribution and biomass validation. The Global Drivers of Forest Loss dataset (2001–2022, Sims et al. 2024) classifies disturbances—including agriculture, logging, wildfire, infrastructure, and natural causes—at 1 km resolution. This will be combined with L-band Vegetation Optical Depth (L-VOD) for global AGB trajectories. Additional inputs such as Landsat and Sentinel-2 NDVI, MODIS and GFED fire records, FAO and ESA-CCI cropland expansion data, and ERA5-Land reanalysis will allow back-extrapolation of disturbance drivers to 1991. Handling these datasets is non-trivial: together they are estimated to require hundreds of terabytes of storage, and pre-processing steps (temporal aggregation, harmonisation of spatial resolutions, multiband image processing, and data cube construction) will at least double storage needs and incur substantial compute costs.
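One of the pre-processing steps listed above, harmonising spatial resolutions, often reduces to aggregating a fine grid onto a coarser one. The sketch below is a minimal pure-Python illustration under stated assumptions: a regular 2D grid stored as nested lists, an integer aggregation factor, and `None` marking missing pixels (e.g. cloud-masked). The function name is hypothetical and not part of any named pipeline; at the data volumes described here, the same operation would run over chunked arrays in a data-cube library.

```python
def block_average(grid, factor):
    """Aggregate a 2D grid (list of rows) by averaging factor x factor blocks.

    Cells equal to None (missing data) are skipped; a block with no valid
    cells becomes None in the coarse grid.
    """
    n_rows, n_cols = len(grid), len(grid[0])
    if n_rows % factor or n_cols % factor:
        raise ValueError("grid dimensions must be divisible by factor")
    coarse = []
    for i0 in range(0, n_rows, factor):
        row = []
        for j0 in range(0, n_cols, factor):
            # Collect the valid fine cells inside this block.
            values = [grid[i][j]
                      for i in range(i0, i0 + factor)
                      for j in range(j0, j0 + factor)
                      if grid[i][j] is not None]
            row.append(sum(values) / len(values) if values else None)
        coarse.append(row)
    return coarse
```

Averaging suits continuous quantities such as biomass density or NDVI; categorical layers such as driver classes would instead need a majority (mode) rule, and on a latitude–longitude grid the cells would also need area weighting because they are not of equal area.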

Computationally, the project exploits GPU-enabled exascale architectures to achieve kilometre-scale simulations with ExaJULES. Validation will involve cross-comparing satellite observations (L-VOD, GEDI canopy structure) with modelled biomass trajectories and, where possible, ground-based forest inventory data. Statistical attribution techniques (e.g., random forest classification, causal inference methods) will be used to disentangle the relative contributions of natural and anthropogenic drivers to observed biomass changes.

These approaches provide the foundation for two complementary teaser projects, each expandable into a full PhD pathway. One focuses on reconstructing disturbance drivers and validating biomass change, while the other advances exascale simulation of coupled AGB–BGB dynamics.

Teaser Project 1. Reconstructing Global Forest Loss Drivers and Validating Biomass Change

This project will extend knowledge of disturbance drivers beyond the existing satellite-derived record (2001–2022) by reconstructing global driver datasets from 1991 to the present. By integrating fire, agriculture, climate-extreme and governance proxies with the Sims et al. (2024) dataset, and using modern data-pipeline techniques, it will deliver a continuous three-decade record of forest loss drivers.

The second objective is to validate AGB change. L-VOD (2002–present) and GEDI canopy structure will provide independent benchmarks for biomass loss and recovery. JULES simulations will be forced with observed disturbance regimes, and performance evaluated against EO-based benchmarks.
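One simple way to express how well simulated biomass matches an EO benchmark is a small set of skill scores. The sketch below computes mean bias, root-mean-square error and Pearson correlation for two aligned series (for example, annual AGB for one region from JULES and from L-VOD). The choice of metrics and the function name are illustrative assumptions, not the project’s evaluation protocol.

```python
import math


def validation_metrics(modelled, observed):
    """Return mean bias, RMSE and Pearson r for two aligned, equal-length series."""
    if len(modelled) != len(observed) or len(modelled) < 2:
        raise ValueError("need two equal-length series with at least 2 values")
    n = len(modelled)
    diffs = [m - o for m, o in zip(modelled, observed)]
    bias = sum(diffs) / n                               # positive = model too high
    rmse = math.sqrt(sum(d * d for d in diffs) / n)
    mean_m = sum(modelled) / n
    mean_o = sum(observed) / n
    cov = sum((m - mean_m) * (o - mean_o) for m, o in zip(modelled, observed))
    var_m = sum((m - mean_m) ** 2 for m in modelled)
    var_o = sum((o - mean_o) ** 2 for o in observed)
    if var_m == 0.0 or var_o == 0.0:
        raise ValueError("correlation undefined for a constant series")
    r = cov / math.sqrt(var_m * var_o)
    return {"bias": bias, "rmse": rmse, "r": r}
```

Bias and RMSE are in the units of the inputs (e.g. Mg C ha⁻¹), while r measures whether the modelled trajectory tracks the timing of observed loss and recovery regardless of offset, so the three are usually reported together.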

Key scientific questions include: How can driver attribution disentangle natural versus anthropogenic causes across continents? How does biomass allocation vary across regions dominated by different drivers (e.g., Amazon, Southeast Asia, boreal forests)? Can reconstructed driver datasets improve JULES/ExaJULES simulations of biomass dynamics? How do uncertainties in EO-based biomass propagate into carbon budget assessments?

This project provides a strong foundation for a PhD centred on historical reconstruction, EO–model fusion, and disturbance attribution.

Teaser Project 2. ExaJULES at 1 km – Simulating Above- and Below-ground Biomass with Exascale Computing

The second project develops and applies ExaJULES, a GPU-enabled exascale version of JULES, to simulate global biomass stocks and fluxes at 1 km resolution. The objective is to resolve how forest disturbance and recovery propagate from canopy to root-zone carbon pools.

Workflows will be developed for kilometre-scale global simulations, with opportunities to contribute to ExaJULES benchmarking and code development. Outputs will be benchmarked against L-VOD and ground inventory datasets. Particular emphasis will be placed on quantifying root–shoot allocation shifts under disturbance and recovery, which remain poorly represented in current models.
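The idea of root–shoot allocation shifting after disturbance can be illustrated with a toy two-pool model. The sketch below is hypothetical and is not JULES or ExaJULES code: a fixed annual carbon uptake is split between AGB and BGB by a simple functional-balance style rule that favours whichever pool is under-represented relative to a target BGB:AGB ratio, each pool loses carbon by first-order turnover, and a disturbance removes a fraction of AGB in a given year. All parameter values are made up for illustration.

```python
def simulate_pools(years, npp=10.0, target_ratio=0.25, turnover_agb=0.02,
                   turnover_bgb=0.03, disturbance=None, agb0=200.0, bgb0=50.0):
    """Toy annual-step model of above- (AGB) and below-ground (BGB) carbon.

    disturbance: optional {year: fraction of AGB removed}.
    Returns a list of (agb, bgb) tuples, one per year.
    """
    disturbance = disturbance or {}
    agb, bgb = agb0, bgb0
    history = []
    for year in range(years):
        agb *= 1.0 - disturbance.get(year, 0.0)
        ratio = bgb / agb if agb > 0 else float("inf")
        # More allocation to shoots when roots are over-represented relative to
        # the target ratio (and vice versa), clipped to [0.1, 0.9].
        shoot_fraction = 0.5 + 0.5 * (ratio - target_ratio) / target_ratio
        shoot_fraction = min(max(shoot_fraction, 0.1), 0.9)
        agb += shoot_fraction * npp - turnover_agb * agb
        bgb += (1.0 - shoot_fraction) * npp - turnover_bgb * bgb
        history.append((agb, bgb))
    return history
```

Running it with a large AGB loss (e.g. `disturbance={5: 0.8}`) shows the rule pushing uptake into shoots while BGB is temporarily over-represented. In a process model, how strongly and how quickly such a shift happens is the poorly constrained behaviour that the kilometre-scale simulations and benchmarks are meant to examine.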

Key scientific questions include: How resilient is AGB–BGB coupling under diverse disturbance regimes (fire, drought, pests, land-use change)? What are the regional and global implications of biomass dynamics for the carbon cycle? How can new satellite missions (e.g., NISAR, BIOMASS) refine BGB representation? How does kilometre-scale modelling alter global carbon budget projections compared with coarse-scale runs?

This project naturally scales into a PhD centred on exascale modelling, GPU acceleration, and root–shoot dynamics, generating novel insights into biomass resilience and carbon–climate feedbacks.

References & Further Reading

- Chen, Y., Feng, X., Fu, B., Ma, H., Zohner, C. M., Crowther, T. W., … & Wei, F. (2023). Maps with 1 km resolution reveal increases in above- and belowground forest biomass carbon pools in China over the past 20 years. Earth System Science Data, 15(2), 897–910.

- Curtis, P. G., Slay, C. M., Harris, N. L., Tyukavina, A., & Hansen, M. C. (2018). Classifying drivers of global forest loss. Science, 361(6407), 1108–1111.

- Hansen, M. C., Potapov, P. V., Moore, R., Hancher, M., Turubanova, S. A., Tyukavina, A., … & Townshend, J. R. (2013). High-resolution global maps of 21st-century forest cover change. Science, 342(6160), 850–853.

- Mo, L., Zohner, C. M., Reich, P. B., Liang, J., De Miguel, S., Nabuurs, G. J., … & Ortiz-Malavasi, E. (2023). Integrated global assessment of the natural forest carbon potential. Nature, 624(7990), 92–101.

- Sims, N. C., et al. (2024). Global drivers of forest loss at 1 km resolution, 2001–2022. Environmental Research Letters. https://doi.org/10.1088/1748-9326/add606

- Zhang, Y., Ling, F., Wang, X., Foody, G. M., Boyd, D. S., Li, X., Du, Y., & Atkinson, P. M. (2021). Tracking small-scale tropical forest disturbances: Fusing the Landsat and Sentinel-2 data record. Remote Sensing of Environment, 261, 112470.

- ExaJULES model: https://excalibur.ac.uk/projects/exajules/

- Global Forest Watch: https://www.globalforestwatch.org

-

Mechanisms for and predictions of occurrence of ocean rogue waves

Project institution: Lancaster University

Project supervisor(s): Dr Suzana Ilic (Lancaster University), Prof Aneta Stefanovska (Lancaster University), Mr Michael Thomas (Reliable Insights) and Dr Bryan Michael Williams (Lancaster University)

Overview and Background

Rogue waves, exceptionally high ocean waves whose height exceeds twice the significant wave height, are rare, short-lived events that pose serious risks to shipping, fishing and maritime infrastructure, including offshore platforms and wind turbines. Understanding how they form and improving their prediction are essential for safe marine operations.
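The definition above can be made operational on a surface-elevation record. The sketch below follows one common convention, assumed here rather than prescribed by the project: individual waves are delimited by zero up-crossings, each wave height is the crest-to-trough range within that window, and the significant wave height is estimated spectrally as H_s ≈ 4σ, where σ is the standard deviation of the elevation (H_1/3, the mean of the highest third of waves, is a common alternative). A wave is flagged as rogue when its height exceeds 2·H_s. Function names are hypothetical.

```python
import math


def zero_upcrossing_waves(eta):
    """Split an elevation record into waves at zero up-crossings of the mean level.

    Returns (start_index, height) for each complete wave, where height is the
    crest-to-trough range between consecutive up-crossings.
    """
    mean = sum(eta) / len(eta)
    x = [v - mean for v in eta]
    ups = [i for i in range(1, len(x)) if x[i - 1] < 0.0 <= x[i]]
    return [(start, max(x[start:end]) - min(x[start:end]))
            for start, end in zip(ups, ups[1:])]


def find_rogue_waves(eta, factor=2.0):
    """Return (hs, rogues): Hs estimated as 4 * std(eta), and the waves whose
    height exceeds factor * Hs, as (start_index, height) pairs."""
    n = len(eta)
    mean = sum(eta) / n
    hs = 4.0 * math.sqrt(sum((v - mean) ** 2 for v in eta) / n)
    rogues = [(i, h) for i, h in zero_upcrossing_waves(eta) if h > factor * hs]
    return hs, rogues
```

Because H_s is computed over the whole record, the choice of record length (commonly tens of minutes of stationary sea state) affects which waves are flagged; that sensitivity is part of why predictions need to be tied to physical conditions.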

Despite advances in theoretical and experimental studies, the physical mechanisms driving rogue wave formation in real seas remain poorly understood, which makes prediction challenging. This PhD project aims to address these gaps by analysing extensive field data, developing advanced non-linear dynamics techniques, and utilising high-performance computing. The goal is to improve understanding of rogue wave dynamics and enhance forecasting capabilities, thereby contributing to the safety and resilience of marine operations.


Methodology and Objectives

The PhD project will address the following questions: How can data processing and modelling be accelerated to enable non-linear analysis and modelling with higher spatial and temporal resolution? Which of the sea state parameters predicted by existing operational wave models are useful for detecting the formation of rogue waves? How do the formation and predictability of rogue waves depend on physical conditions?

Teaser 1:

This data-intensive project aims to accelerate novel time-localised analysis methods to investigate physical mechanisms underlying rogue waves and predict their occurrence.

O1: Exploit GPU-accelerated computing to parallelise algorithms for time-localised phase coherence and coupling between waves recorded at many spatial points, enabling scaling to higher resolution and near-real-time analysis.

O2: Isolate the mechanisms leading to the formation of rogue waves using algorithms developed in O1.

O3: Develop in-situ feature detection for automated analyses exploiting GPU and assess the relationship between the occurrence of rogue waves and their characteristics from time-series measured under different physical conditions.

O4: Develop a time-series-based prediction modelling approach using the relationships identified in O2 and O3, and assess its ability to predict the occurrence of rogue waves.

Methods:

The numerical modelling and algorithms for time-series analysis will exploit GPU-accelerated computing; exascale computing will then allow near-real-time practical applications. The Multiscale Oscillatory Dynamics Analysis (MODA) toolbox for non-linear and time-localised phenomena in time series (e.g. phase coherence, coupling and wave energy exchange [3, 4]) will be parallelised and used to identify rogue wave mechanisms. Automated pattern analysis and feature engineering will be applied using Tangent to detect anomalous sea surface elevations and to enable an easily deployable, computationally light forecasting solution based on processed data. The methods will first be applied to laboratory data (e.g. [1]) and then to publicly available field measurements (e.g. the Free Ocean Wave Dataset, with more than 1.4 billion wave measurements). The newly developed prediction modelling approach will be systematically validated against measured data.
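Wavelet phase coherence, one of the time-localised measures mentioned above, can be sketched as follows. This is a minimal, unoptimised illustration and not the MODA implementation: a complex Morlet wavelet at a single frequency is correlated directly with each signal, instantaneous phases are taken from the coefficients, and coherence is the magnitude of the time-averaged unit phasor of the phase difference (1 for a constant phase lag, near 0 for unrelated phases). The wavelet width (`n_cycles`), the ±3σ truncation and the function names are assumptions. The nested loops make the cost visible: it scales with record length × wavelet length × number of frequencies × number of sensor pairs, which is the work a GPU port would parallelise.

```python
import cmath
import math


def morlet_coefficients(signal, freq, fs, n_cycles=6.0):
    """Complex Morlet wavelet coefficients of `signal` at one frequency (Hz).

    Direct correlation with a Gaussian-windowed complex exponential; samples
    within half a wavelet of either end are dropped to avoid edge effects.
    """
    sigma_t = n_cycles / (2.0 * math.pi * freq)  # Gaussian width in seconds
    half = int(math.ceil(3.0 * sigma_t * fs))    # truncate at +/- 3 sigma
    # Conjugated wavelet, so that the coefficient phase follows the signal phase.
    kernel = [cmath.exp(-2j * math.pi * freq * k / fs)
              * math.exp(-0.5 * (k / (fs * sigma_t)) ** 2)
              for k in range(-half, half + 1)]
    coeffs = []
    for n in range(half, len(signal) - half):
        acc = 0j
        for k, w in enumerate(kernel, start=-half):
            acc += signal[n + k] * w
        coeffs.append(acc)
    return coeffs


def phase_coherence(x, y, freq, fs):
    """Time-averaged wavelet phase coherence |<exp(i(phi_x - phi_y))>| at `freq`."""
    wx = morlet_coefficients(x, freq, fs)
    wy = morlet_coefficients(y, freq, fs)
    total = sum(cmath.exp(1j * (cmath.phase(a) - cmath.phase(b)))
                for a, b in zip(wx, wy))
    return abs(total) / len(wx)
```

For example, with records sampled at 4 Hz, two 0.1 Hz oscillations with a fixed lag give a coherence close to 1 at 0.1 Hz, while a 0.1 Hz and a 0.13 Hz oscillation give a value close to 0, because their phase difference drifts through many cycles over the record.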

Teaser 2:

This is a data-intensive project focused on the computational optimisation of time series analyses for dynamic systems and the relationship between rogue wave properties and environmental conditions.

O1: Assess the current performance of the numerical tools included in MODA and Tangent in terms of their relevance for detecting the mechanisms of rogue waves and their computational efficiency.

O2: Optimise the algorithms of the tools identified in O1 for multiple GPUs to improve computation time and experimental throughput, enabling large-scale ensemble time-series analyses.

O3: Develop and apply a GPU version of MODA to field measured data to isolate mechanisms that lead to the formation of rogue waves.

O4: Assess the relationship between the occurrence of rogue waves and concurrent ocean and atmospheric data.

Methods:

The Multiscale Oscillatory Dynamics Analysis (MODA) toolbox offers several high-order methods for time-series analysis, some based on wavelets. The high computational demands of its uncertainty evaluation methods limit their use for operational purposes; optimised algorithms, GPU acceleration and exascale facilities will enable higher resolution and practical applications. MODA will be used to identify the mechanisms underlying rogue wave formation from field-measured time series of surface elevation (e.g. the Free Ocean Wave Dataset). Concurrent environmental data (e.g. surface ocean currents, wind and atmospheric pressure) will be collated either from field measurements or from operational forecast models provided by meteorological offices. The correlation between the occurrence of rogue waves and environmental parameters, as well as ‘causal’ relationships between the identified mechanisms and the environmental conditions, will be investigated using the Tangent modelling engine, which could be incorporated into predictions in the future.
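One widely used form of uncertainty evaluation for coherence-type measures is surrogate testing: the statistic is recomputed for many surrogate series in which any genuine relationship has been destroyed, and the observed value is judged significant only if it exceeds a high percentile of the surrogate distribution. The sketch below assumes the instantaneous phases have already been extracted (e.g. by a wavelet transform) and uses cyclic time-shift surrogates; the surrogate type, the count of 200 and the 95th-percentile threshold are illustrative assumptions rather than MODA’s settings. Each surrogate is independent, which is why this step maps naturally onto many GPU threads or nodes.

```python
import cmath
import random


def coherence_from_phases(phi_x, phi_y):
    """|time average of exp(i(phi_x - phi_y))|: 1 = phase-locked, ~0 = unrelated."""
    total = sum(cmath.exp(1j * (a - b)) for a, b in zip(phi_x, phi_y))
    return abs(total) / len(phi_x)


def surrogate_threshold(phi_x, phi_y, n_surrogates=200, percentile=95.0, seed=0):
    """Coherence threshold from cyclic time-shift surrogates of phi_y.

    Each surrogate rotates phi_y by a random lag of at least 10% of the record,
    breaking its time alignment with phi_x while largely keeping its own dynamics.
    """
    rng = random.Random(seed)
    n = len(phi_y)
    min_shift = max(1, n // 10)
    values = []
    for _ in range(n_surrogates):  # independent iterations: trivially parallel
        shift = rng.randint(min_shift, n - min_shift)
        values.append(coherence_from_phases(phi_x, phi_y[shift:] + phi_y[:shift]))
    values.sort()
    return values[min(len(values) - 1, int(percentile / 100.0 * len(values)))]
```

An observed coherence above the returned threshold would be called significant at roughly the 5% level under this surrogate model. Note that time-shift surrogates assume the phases are not strictly periodic; for a pure sinusoid, every shifted copy would also be perfectly coherent.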


References & Further Reading

- [1] Luxmoore, J.F., Ilic, S. and Mori, N., 2019. On kurtosis and extreme waves in crossing directional seas: a laboratory experiment. Journal of Fluid Mechanics, 876, pp.792–817.

- [2] Mori, N., Waseda, T. and Chabchoub, A. (eds.), 2023. Science and Engineering of Freak Waves. Elsevier. https://doi.org/10.1016/C2021-0-01205-0

- [3] Newman, J., Pidde, A. and Stefanovska, A., 2021. Defining the wavelet bispectrum. Applied and Computational Harmonic Analysis, 51, pp.171–224.

- [4] Stankovski, T., Pereira, T., McClintock, P.V. and Stefanovska, A., 2017. Coupling functions: universal insights into dynamical interaction mechanisms. Reviews of Modern Physics, 89(4), p.045001.

- [5] Yang, X., Rahmani, H., Black, S. and Williams, B.M., 2025. Weakly supervised co-training with swapping assignments for semantic segmentation. In European Conference on Computer Vision (pp.459–478). Springer, Cham.

- [6] Jiang, Z., Rahmani, H., Black, S. and Williams, B.M., 2023. A probabilistic attention model with occlusion-aware texture regression for 3D hand reconstruction from a single RGB image. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (pp.758–767).

- [7] Jiang, Z., Rahmani, H., Angelov, P., Black, S. and Williams, B.M., 2022. Graph-context attention networks for size-varied deep graph matching. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (pp.2343–2352).

-

Mixed-precision multigrid for weather and climate applications

Project institution: University of Edinburgh

Project supervisor(s): Prof Michèle Weiland (University of Edinburgh), Dr Eike Mueller (University of Bath) and Dr Thomas Melvin (Met Office)

Overview and Background

Modern hardware (primarily GPUs) is evolving to make extensive use of floating-point precisions lower than 64-bit, which has historically been the norm for simulation algorithms. This is partly because machine learning workloads remain effective at lower precision, and partly because lower-precision arithmetic units require less silicon area. Lower precision can improve performance through better utilisation of vector units, combined with lower demand on memory and network bandwidth. This project will investigate applying low-precision computation to weather and climate simulation codes, such as the Met Office’s new forecasting model LFRic. The focus will be on exploring performance gains in the multigrid solver through mixed-precision approaches.

Methodology and Objectives

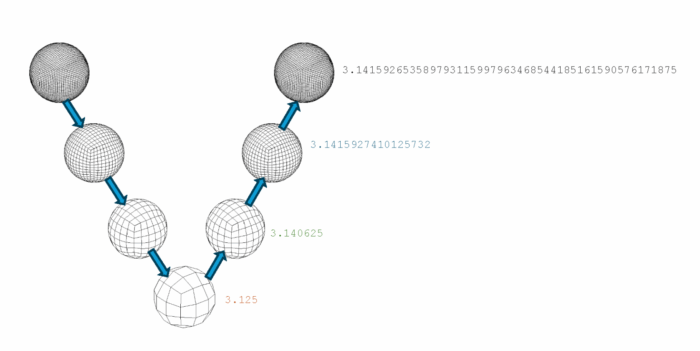

Codes such as LFRic, the Met Office’s next-generation numerical weather prediction application, already use a geometric multigrid preconditioner in the linear solver (to improve convergence and reduce the number of communication calls between processing units). They also already use mixed precision: the linear solver and transport scheme are routinely run as 32-bit bubbles within the code, and the model is planned to run fully at 32-bit. However, they do not yet take advantage of the smallest precisions (e.g. 16-bit, 8-bit and 4-bit). Geometric multigrid uses successively coarser meshes, allowing error modes to be selectively resolved by scale. The student will investigate how to combine mesh coarsening/refinement with precision coarsening/refinement. The student will be expected to devise such approaches, initially with simplified model setups (e.g. the shallow-water equations), and to evaluate them from both computational and accuracy perspectives before ultimately considering how this should be approached in LFRic.

Methodology: The student will develop mixed- and low-precision multigrid implementations and rigorously evaluate both their performance benefits and their numerical accuracy, with a particular focus on exascale hardware architectures.

Teaser Project 1: Numerical implications of mixed/low precision multigrid

Reducing floating-point precision has implications for numerical accuracy. For multigrid, however, with its coarsening and refining steps, a loss of accuracy during the coarsening steps of the algorithm may be acceptable. Using a simple 2D problem, the student will investigate what impact lowering precision has on the overall numerical stability and quality of the computation, and how far precision can be lowered before the numerics break down. The objective of Teaser Project 1 is to evaluate how far numerical precision can safely be lowered in the context of multigrid (considering both geometric and precision-based refinement) before the quality of the results is compromised.
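A quick way to experiment with how far precision can be pushed, without special hardware, is to emulate reduced precision by rounding every intermediate result. The sketch below uses the `'f'` (IEEE 754 binary32) and `'e'` (binary16) format characters of Python’s `struct` module for this; it is an assumption-laden toy, not how a GPU code would be instrumented, and the helper names are hypothetical. Accumulating many small increments exposes the characteristic failure mode: once the running total is large enough, each increment falls below half the spacing between representable numbers and is rounded away.

```python
import struct


def round_to(value, fmt):
    """Round a Python float to the precision of a struct format character.

    'd' = 64-bit (no change), 'f' = 32-bit, 'e' = 16-bit (half precision).
    """
    return struct.unpack(fmt, struct.pack(fmt, value))[0]


def accumulate(increment, steps, fmt):
    """Sum `increment` `steps` times, rounding to `fmt` after every addition."""
    total = 0.0
    inc = round_to(increment, fmt)
    for _ in range(steps):
        total = round_to(total + inc, fmt)
    return total


for fmt, name in (("d", "64-bit"), ("f", "32-bit"), ("e", "16-bit")):
    print(f"{name}: 0.1 added 10000 times = {accumulate(0.1, 10_000, fmt)}")
```

In this emulation the 16-bit total stalls at 256.0, the first magnitude at which the spacing of half-precision numbers (0.25) exceeds twice the increment. Multigrid can tolerate such errors in coarse-level corrections only if a higher-precision outer loop keeps measuring and correcting the true residual.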

Teaser Project 2: Performance impact of mixed/low precision multigrid

Modern GPUs can accelerate lower-precision floating-point operations in hardware, though the exact performance impact is hardware-specific and problem-dependent. In addition, using lower-precision floating-point numbers should reduce demands on the memory subsystem and improve the utilisation of vector units. The student will start by using iterative refinement in the current setup (a Richardson iteration preconditioned with a multigrid V-cycle), evaluate the performance improvements that can be achieved by gradually lowering floating-point precision, and quantify these improvements (e.g. runtime and memory bandwidth) as a fraction of the theoretical peak. The objective of Teaser Project 2 is to define upper and lower bounds on the performance improvements that can be expected from mixing levels of precision, from 64-bit down to 8-bit or even 4-bit.
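The iterative-refinement setup described above can be prototyped in a few dozen lines. The sketch below is a hypothetical, simplified stand-in for the real solver: a 1D Poisson problem (−u″ = f with zero Dirichlet boundaries), a geometric multigrid V-cycle with weighted-Jacobi smoothing, full-weighting restriction and linear interpolation, whose results are rounded to a chosen precision after each vector operation via `struct` emulation, and an outer Richardson iteration whose residual and solution update stay in 64-bit. The residual is rescaled to O(1) before entering the low-precision V-cycle to avoid underflow in 16-bit. Grid size, smoother weight and sweep counts are assumptions.

```python
import struct


def rnd(values, fmt):
    """Round each value to a struct precision: 'd' 64-bit, 'f' 32-bit, 'e' 16-bit."""
    if fmt == "d":
        return list(values)
    return [struct.unpack(fmt, struct.pack(fmt, v))[0] for v in values]


def apply_a(u, h):
    """Discrete -u'' on interior points with zero Dirichlet boundaries."""
    n = len(u)
    return [(2.0 * u[i] - (u[i - 1] if i > 0 else 0.0)
             - (u[i + 1] if i < n - 1 else 0.0)) / (h * h) for i in range(n)]


def smooth(e, r, h, fmt, sweeps, omega=2.0 / 3.0):
    """Weighted Jacobi sweeps for A e = r (the diagonal of A is 2 / h^2)."""
    for _ in range(sweeps):
        ae = apply_a(e, h)
        e = rnd([e[i] + omega * (h * h / 2.0) * (r[i] - ae[i])
                 for i in range(len(e))], fmt)
    return e


def v_cycle(r, h, fmt, sweeps=2):
    """One V-cycle for A e = r in precision `fmt`; len(r) must be 2^k - 1."""
    n = len(r)
    if n == 1:
        return rnd([r[0] * h * h / 2.0], fmt)  # exact coarsest-grid solve
    e = smooth([0.0] * n, r, h, fmt, sweeps)
    res = rnd([ri - ai for ri, ai in zip(r, apply_a(e, h))], fmt)
    # Full-weighting restriction: coarse point j sits on fine point 2j + 1.
    rc = rnd([0.25 * res[2 * j] + 0.5 * res[2 * j + 1] + 0.25 * res[2 * j + 2]
              for j in range((n - 1) // 2)], fmt)
    ec = v_cycle(rc, 2.0 * h, fmt, sweeps)
    # Linear interpolation of the coarse correction back to the fine grid.
    for j, c in enumerate(ec):
        e[2 * j] += 0.5 * c
        e[2 * j + 1] += c
        e[2 * j + 2] += 0.5 * c
    return smooth(rnd(e, fmt), r, h, fmt, sweeps)


def solve(f, fmt, iterations=20):
    """Richardson iteration: 64-bit residual and update, V-cycle in `fmt`.

    Returns the solution and the max-norm residual before each update.
    """
    n = len(f)
    h = 1.0 / (n + 1)
    u = [0.0] * n
    history = []
    for _ in range(iterations):
        r = [fi - ai for fi, ai in zip(f, apply_a(u, h))]  # 64-bit residual
        scale = max(abs(v) for v in r)
        history.append(scale)
        if scale == 0.0:
            break
        # Rescale to O(1) so 16-bit values neither overflow nor underflow.
        e = v_cycle(rnd([v / scale for v in r], fmt), h, fmt)
        u = [ui + scale * ei for ui, ei in zip(u, e)]  # 64-bit update
    return u, history


if __name__ == "__main__":
    rhs = [1.0] * 31  # hypothetical right-hand side on 31 interior points
    for fmt in ("d", "f", "e"):
        _, history = solve(rhs, fmt, iterations=12)
        print(fmt, [f"{r:.1e}" for r in history])
```

The design choice being illustrated is that only the preconditioner runs in low precision: the attainable accuracy is set by the 64-bit residual, while most of the arithmetic and memory traffic sits inside the V-cycle. A natural extension in the spirit of precision coarsening would be to pass a different `fmt` per level.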

-

Modelling threatened biodiversity at national, continental and planetary scales

Project institution: University of Glasgow

Project supervisor(s): Dr Vinny Davies (University of Glasgow), Dr Mark Bull (University of Edinburgh), Prof Richard Reeve (University of Glasgow), Prof Christina Cobbold (University of Glasgow) and Dr Neil Brummitt (Natural History Museum)

Overview and Background

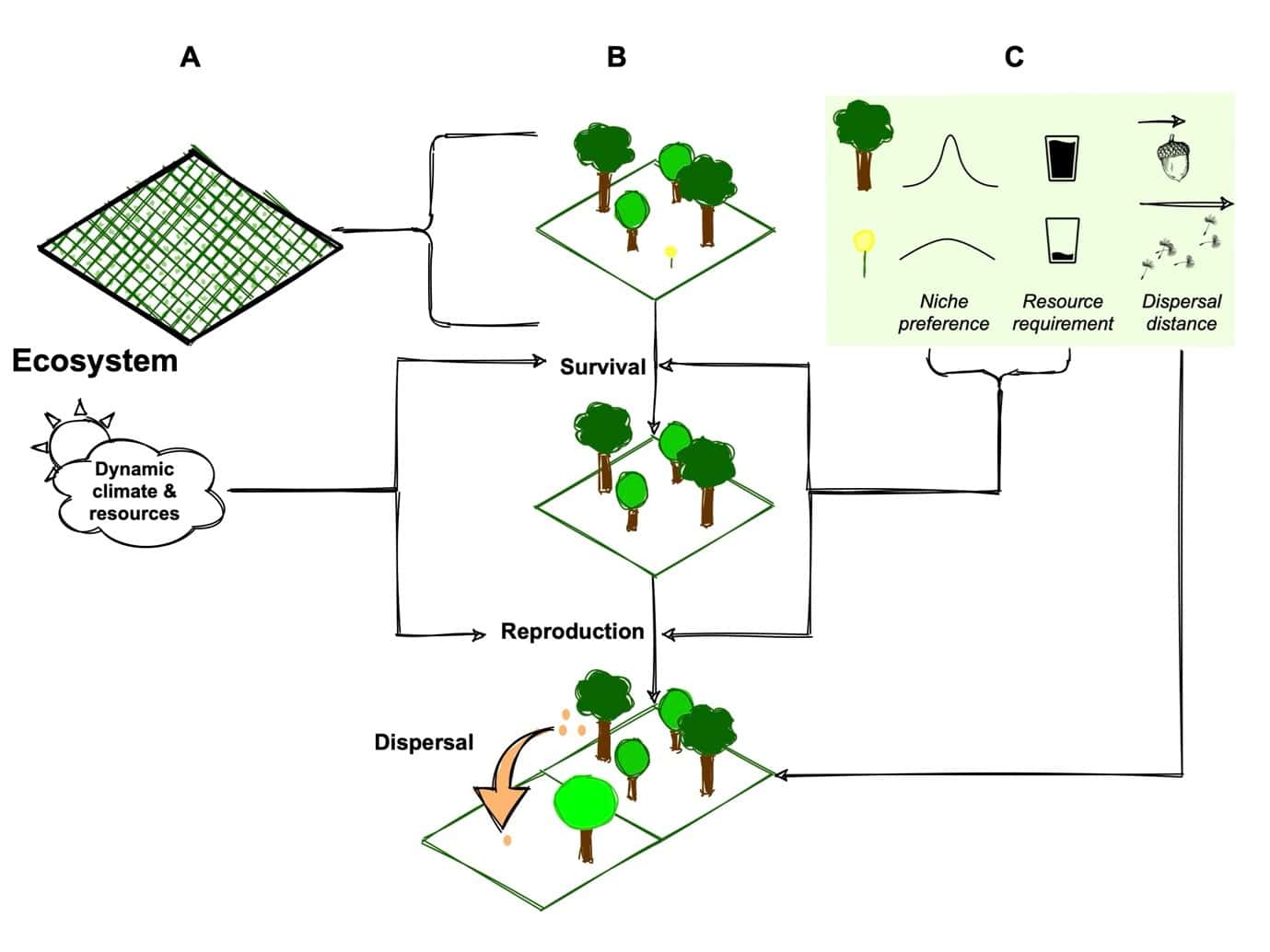

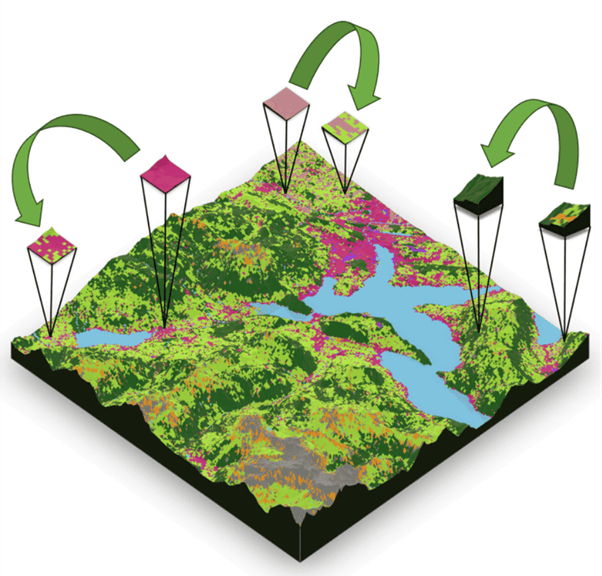

Understanding the stability of ecosystems and how they are affected by climate and land-use change can allow us to identify sites where biodiversity loss will occur and help policymakers direct mitigation efforts. Our current digital twin of plant biodiversity – https://github.com/EcoJulia/EcoSISTEM.jl – provides functionality for simulating species through processes of competition, reproduction, dispersal and death, as well as environmental changes in climate and habitat, but it would benefit from enhancement in several areas. This project would aim to improve the speed and feasible scale of model simulations, so that stochastic modelling can be used to quantify uncertainty, scalable techniques can be developed for inferring missing parameters, and more complex models become tractable. It would do this through two approaches: on the one hand, porting the code to run on GPUs for higher computational efficiency; on the other, applying techniques such as mesh refinement and partitioning so that models run at high resolution only where the ecosystem complexity requires it.

Methodology and Objectives

Teaser Project 1 Objectives: GPU: Port core EcoSISTEM code to GPU

This project will analyse the core CPU routines in EcoSISTEM and port them to GPU. This will use packages from the JuliaGPU ecosystem, which provide a relatively easy user interface to NVIDIA and AMD GPUs; both are available on EPCC’s HPC system, to which the student will have access. The main branch of the EcoSISTEM code is already efficiently parallelised for CPUs, and a preliminary assessment has suggested that the porting task should be feasible within a teaser project. This teaser project can be extended to a full PhD in a variety of ways:
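The sketch below illustrates, in Python rather than Julia, the data-parallel structure that makes such a port attractive: each cell’s new value depends only on the previous state of itself and its neighbours, so every cell could be assigned to its own GPU thread. The deterministic logistic growth rule, the 4-neighbour dispersal with reflecting edges and all parameter values are hypothetical simplifications and are not taken from EcoSISTEM, which models competition, reproduction, dispersal and death stochastically.

```python
def step(abundance, growth=0.2, capacity=100.0, dispersal=0.1):
    """One synchronous update of a 2D grid of abundances (list of rows).

    Each cell keeps (1 - dispersal) of its post-growth population and sends a
    quarter of the dispersing fraction to each of its 4 neighbours; shares that
    would leave the grid are reflected back, so total abundance is conserved.
    """
    rows, cols = len(abundance), len(abundance[0])

    def grown(i, j):
        # Logistic growth within a single cell.
        n = abundance[i][j]
        return n + growth * n * (1.0 - n / capacity)

    def cell_update(i, j):
        # Reads only the previous state, so all cells can be updated in parallel.
        total = (1.0 - dispersal) * grown(i, j)
        for di, dj in ((-1, 0), (1, 0), (0, -1), (0, 1)):
            ni, nj = i + di, j + dj
            if 0 <= ni < rows and 0 <= nj < cols:
                total += 0.25 * dispersal * grown(ni, nj)
            else:
                total += 0.25 * dispersal * grown(i, j)  # reflect at edges
        return total

    return [[cell_update(i, j) for j in range(cols)] for i in range(rows)]
```

The double loop in the last line is the serial equivalent of launching one kernel instance per cell; the harder parts of a real port are typically the data layout across species and the random-number generation for the stochastic processes.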

On the one hand, once the GPU port speed-ups have been realised, the student can add major new components to EcoSISTEM. For instance, the student can investigate uncertainty quantification and parameter inference techniques within EcoSISTEM.

On the other hand, there is a more sophisticated development (dev) branch of EcoSISTEM that is not currently well optimised but allows greater flexibility in how interactions can occur between components of the model. Porting this to GPUs will be a significantly harder task, but will allow richer interactions between ecosystem components to be modelled more easily.

Teaser Project 2 Objectives: Scalability improvements: mesh refinement and partitioning

Different types of ecosystem require different spatial resolutions to be modelled adequately. For example, hedgerows and rivers are linear features that may be very species-dense compared to the surrounding terrain. EcoSISTEM currently uses a uniform mesh size, so the whole model has the same spatial resolution. To increase the fidelity of the model in a scalable way, the spatial resolution must be increased only where needed. This teaser project would begin by capturing the requirements for non-uniform meshing and prototyping an implementation, possibly in a simple proxy code.
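A quadtree is one common way to prototype such non-uniform meshing; it is assumed here for illustration rather than chosen by the project. A square cell is split into four children whenever a refinement criterion holds, up to a maximum depth. In the sketch below the criterion is a hypothetical test of whether a cell straddles a linear feature (a river along y = 0.3), standing in for, say, a species-density threshold.

```python
def refine(x0, y0, size, needs_refinement, depth=0, max_depth=4):
    """Recursively split the square [x0, x0+size) x [y0, y0+size) into quadrants.

    needs_refinement(x0, y0, size) -> bool decides whether a cell is split.
    Returns the leaf cells as (x0, y0, size) tuples.
    """
    if depth >= max_depth or not needs_refinement(x0, y0, size):
        return [(x0, y0, size)]
    half = size / 2.0
    leaves = []
    for dx in (0.0, half):
        for dy in (0.0, half):
            leaves.extend(refine(x0 + dx, y0 + dy, half, needs_refinement,
                                 depth + 1, max_depth))
    return leaves


def crosses_river(x0, y0, size, river_y=0.3):
    """Hypothetical criterion: does the cell straddle a river along y = river_y?"""
    return y0 <= river_y < y0 + size
```

For a unit square with `max_depth=3`, this produces a band of 0.125-sized cells along the river and 0.5-sized cells elsewhere (22 leaves in total instead of the 64 a uniform 0.125 mesh would need), which is the kind of saving that makes the resulting uneven workload worth load-balancing.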

With a non-uniform mesh, scaling to many thousands of processes requires load-balancing techniques, either static or dynamic, depending on the use case. The teaser project will investigate the suitability of existing mesh partitioners and adaptive meshing techniques, and how they can interface with the existing Julia code.
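A simple static load-balancing strategy for a non-uniform mesh is to order cells along a space-filling curve and cut that ordering into contiguous chunks of roughly equal total work. The sketch below shows both steps under stated assumptions: a Z-order (Morton) key for integer cell coordinates, and a hypothetical per-cell work weight (e.g. proportional to refinement level or species count). Dedicated graph partitioners such as METIS also minimise communication between parts, which this greedy version ignores.

```python
def morton_key(i, j, bits=16):
    """Interleave the bits of (i, j) to give a Z-order (Morton) curve index."""
    key = 0
    for b in range(bits):
        key |= ((i >> b) & 1) << (2 * b + 1) | ((j >> b) & 1) << (2 * b)
    return key


def partition_by_weight(weights, n_parts):
    """Assign ordered cells to n_parts contiguous parts of roughly equal weight.

    Each cell goes to the part containing the midpoint of its weight interval
    on the cumulative-weight axis. Returns one part index per cell.
    """
    total = float(sum(weights))
    if total <= 0 or n_parts < 1:
        raise ValueError("need positive total weight and at least one part")
    target = total / n_parts
    parts, cumulative = [], 0.0
    for w in weights:
        midpoint = cumulative + 0.5 * w
        parts.append(min(n_parts - 1, int(midpoint / target)))
        cumulative += w
    return parts


def part_loads(weights, parts, n_parts):
    """Total weight assigned to each part."""
    loads = [0.0] * n_parts
    for w, p in zip(weights, parts):
        loads[p] += w
    return loads
```

Typical use is to sort cells by `morton_key`, pass their weights in that order to `partition_by_weight`, and hand each part to one process; because nearby cells on a Z-order curve tend to be spatially close, parts stay reasonably compact. Re-running the same procedure when the mesh adapts is the simplest form of dynamic rebalancing.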


-

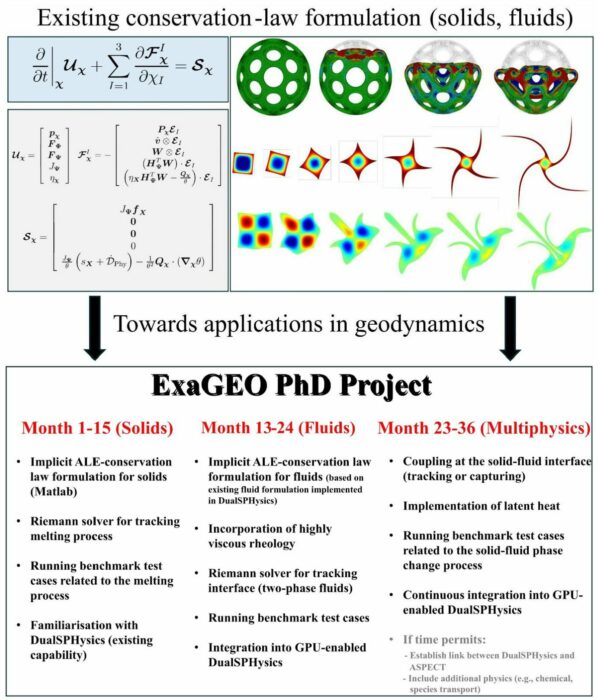

Multi-scale modelling of volcanoes and their deep magmatic roots: Constitutive model development using data-driven methods

Project institution: University of Glasgow

Project supervisor(s): Dr Ankush Aggarwal (University of Glasgow), Dr Tobias Keller (University of Glasgow) and Prof Andrew McBride (University of Glasgow)

Overview and Background

This PhD studentship focuses on developing GPU-accelerated models of the magmatic processes that underpin volcanic hazards and magmatic resource formation. These processes span scales from sub-millimetre mineral-fluid-melt interactions up to kilometre-scale magma dynamics and crustal deformation. Magma is a multi-phase mixture of solids, silicate melts and volatile-rich fluids, which interact in complex thermo-chemical-mechanical ways.

This is a standalone PhD project that is part of a larger framework of magmatic systems research by the wider team. The project will contribute one component of a hierarchical, multi-scale modelling framework using advanced GPU-based techniques. Specifically, in this project, the PhD student will develop constitutive relationships between stresses and strains/strain-rates of various phases at the magmatic system-scale based on granular-scale mechanical simulations (available through an existing collaboration). The result will enable accurate, large-scale simulations of magma dynamics that capture the complexity of micro-scale constituents and their interactions.

Your work will include software development, integrating and interpreting field and experimental data sets, attending regular seminars, collaborating within the wider research team, and receiving training through ExaGEO workshops.

Volcanic eruptions originate from shallow crustal magma reservoirs built up over long periods. As magma cools and crystallises, it releases fluid phases (aqueous, briny, or containing carbonates, metal oxides or sulfides) whose low viscosity and density contrasts drive fluid segregation. This fluid migration can trigger volcanic unrest or concentrate metals into economically valuable deposits. The distribution of fluids, as discrete droplets or as interconnected drainage networks, depends crucially on crystal and melt properties. Direct observations are challenging, so high-resolution, GPU-accelerated simulations provide a way to understand these complex and dynamic systems.

Methodology and Objectives

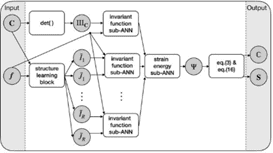

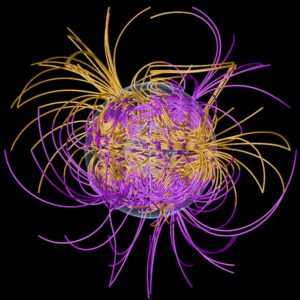

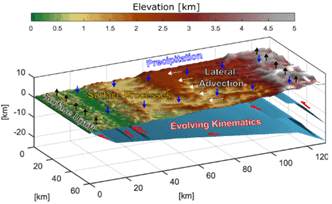

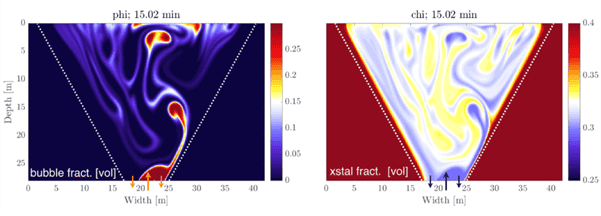

A Gaussian-process-based simulation result

A neural-network-based constitutive modelling framework

Modelling volcanic systems is challenging due to the multi-scale nature of their underlying physical and chemical processes. System-scale dynamics (100 m to 100 km) emerge from interactions involving crystals, melt films, and fluid droplets or channels on micro- to centimetre scales. To link these scales, this project uses a hierarchical approach: (i) direct numerical simulations of granular-scale phase interactions, (ii) deep-learning-based computational homogenisation to extract effective constitutive relations, and (iii) mixture continuum models that apply these relations at the system scale. All components leverage GPU-accelerated computing and deep learning to handle direct simulations at local scales, train effective constitutive models, and achieve sufficient resolution at the system scale.

In this project, the candidate will extract effective constitutive relations by computationally homogenising the micro-scale mechanical simulations (available through an existing collaboration). The effective constitutive properties will then be used in the macro-scale models to capture the multi-scale effects accurately. The project will leverage recent advances in the use of neural networks [1,2] and Gaussian processes [3,4] for constitutive model development. A range of micro-scale simulations covering the different deformation regimes has already been run to generate training data. These results will be used to train a data-driven constitutive model; approaches based on neural networks and Gaussian processes will be explored and compared. The trained model will then be used in macro-scale simulations, and its results will be compared with those obtained using the constitutive relations currently assumed in the literature. Lastly, the variability resulting from this homogenisation process will be quantified and its propagation into macro-scale simulations assessed, to ensure confidence in the results. Model applications will focus on the proposed regime transition from disconnected bubble migration to interconnected channelised seepage of fluids from crystallising magma bodies [5].

Within this project, the student will start by working on two “teaser” sub-projects to gain familiarity with different techniques and data, then choose how to further develop and focus their research.

Teaser Project 1 Objectives: This sub-project, conducted over the first year, will focus on neural networks for constitutive modelling. Neural networks (NNs) are the most widely used class of deep learning models, and recent work has used them for constitutive model development, identification, and discovery [1,2]. Their flexibility in modelling wide-ranging phenomena comes with a large number of tunable parameters (weights), associated uncertainty, and the need for large training datasets. This teaser project will explore the use of NNs for constitutive model development based on simplified one- and two-phase micro-scale systems, including identifying a suitable architecture, training hyperparameters, and the required training dataset. A GPU-based implementation will be developed to make training high-dimensional neural networks feasible. This teaser project will pave the way towards a neural-network-based approach for the overall project over the following three years, in which the initial implementation will be extended to complex micro-scale simulations modelling four phases. In the full project, the uncertainty associated with the neural networks will also be quantified and the required training data optimised. These additions will further increase the computational cost, necessitating a GPU-accelerated framework.
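To make the core idea concrete, here is a minimal sketch of fitting a neural-network constitutive model, assuming a one-dimensional effective-strain to effective-stress map and a hypothetical cubic stiffening law standing in for the homogenised micro-scale data. The function names (`synthetic_stress`, `init_params`, `forward`, `train`), the single tanh hidden layer and the learning rate are illustrative choices, not the project's. Plain NumPy with hand-written backpropagation keeps the example self-contained; a GPU implementation would typically use a framework with automatic differentiation.

```python
import numpy as np


def synthetic_stress(strain):
    # Hypothetical stiffening law used only as a stand-in for homogenised data
    return 2.0 * strain + 0.5 * strain**3


def init_params(rng, hidden=32):
    # One hidden layer of tanh units mapping strain (1 input) to stress (1 output)
    return {
        "W1": rng.normal(0.0, 1.0, (1, hidden)),
        "b1": np.zeros(hidden),
        "W2": rng.normal(0.0, 1.0 / np.sqrt(hidden), (hidden, 1)),
        "b2": np.zeros(1),
    }


def forward(params, x):
    # Returns predicted stress and the hidden activations (needed for backprop)
    h = np.tanh(x @ params["W1"] + params["b1"])
    return h @ params["W2"] + params["b2"], h


def train(n_samples=200, hidden=32, lr=0.01, epochs=3000, seed=0):
    rng = np.random.default_rng(seed)
    x = rng.uniform(-1.5, 1.5, (n_samples, 1))  # effective strain samples
    y = synthetic_stress(x)                      # effective stress targets
    params = init_params(rng, hidden)
    losses = []
    for _ in range(epochs):
        pred, h = forward(params, x)
        err = pred - y
        losses.append(float(np.mean(err**2)))
        # Backpropagation of the mean-squared-error loss
        g_pred = 2.0 * err / n_samples
        g_W2 = h.T @ g_pred
        g_b2 = g_pred.sum(axis=0)
        g_h = (g_pred @ params["W2"].T) * (1.0 - h**2)  # tanh derivative
        g_W1 = x.T @ g_h
        g_b1 = g_h.sum(axis=0)
        # Full-batch gradient-descent update
        for name, grad in (("W1", g_W1), ("b1", g_b1), ("W2", g_W2), ("b2", g_b2)):
            params[name] -= lr * grad
    return params, losses
```

Usage: `params, losses = train()` followed by `forward(params, np.array([[0.5]]))[0]` gives the predicted stress at a strain of 0.5. The teaser project's questions (architecture, hyperparameters, how much training data is needed) correspond to the `hidden`, `lr`, `epochs` and `n_samples` arguments here.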

Teaser Project 2 Objectives: This sub-project, conducted over the first year, will focus on Gaussian processes for constitutive modelling. Gaussian processes (GPs) are rigorous statistical tools that offer an attractive alternative to neural networks [3]. Their main advantage is that, in addition to the mean prediction, they capture the variance (confidence) of the results, which can in turn indicate which micro-scale simulations must be run to improve accuracy. GPs have recently been used for constitutive model development for hyperelastic solids [4]. This teaser project will explore the use of GPs for modelling the effective constitutive relationships of simplified one- and two-phase micro-scale systems, and will use the results to select the required micro-scale simulations. Thermodynamic constraints on the constitutive model will be added by extending the GP framework of [4], which will increase the training cost; a GPU-based implementation will therefore be required to make the computation feasible. If this approach is selected for the rest of the PhD, it will be extended to the fully complex micro-scale model over the following three years. Moreover, the GP approach will be leveraged to develop a robust framework for the design of experiments, so that there is high confidence in the resulting constitutive properties. Design of experiments brings a computational cost that can grow exponentially, again necessitating a GPU-accelerated framework.
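As a hedged illustration of the GP advantage described above, the sketch below performs standard GP regression (the Cholesky-based formulation found in [3]) on a one-dimensional strain-to-stress map, then uses the predictive variance to choose where the next micro-scale simulation would be most informative. The squared-exponential kernel, its hyperparameters, the hypothetical cubic law in the usage example and all function names are assumptions; the thermodynamic constraints of [4] are not included.

```python
import numpy as np


def rbf_kernel(a, b, length_scale=0.5, variance=1.0):
    # Squared-exponential covariance between 1-D input arrays a and b
    d = a[:, None] - b[None, :]
    return variance * np.exp(-0.5 * (d / length_scale) ** 2)


def gp_posterior(x_train, y_train, x_test, noise=1e-4, **kernel_args):
    # Posterior mean and variance of the latent function at x_test
    K = rbf_kernel(x_train, x_train, **kernel_args) + noise * np.eye(len(x_train))
    K_s = rbf_kernel(x_train, x_test, **kernel_args)
    K_ss = rbf_kernel(x_test, x_test, **kernel_args)
    L = np.linalg.cholesky(K)
    alpha = np.linalg.solve(L.T, np.linalg.solve(L, y_train))
    mean = K_s.T @ alpha
    v = np.linalg.solve(L, K_s)
    var = np.diag(K_ss) - np.sum(v**2, axis=0)
    return mean, np.maximum(var, 0.0)  # clip tiny negative round-off


def next_simulation(x_train, y_train, candidates, **kw):
    # Variance-driven selection: run the micro-scale simulation where the GP is least certain
    _, var = gp_posterior(x_train, y_train, candidates, **kw)
    return candidates[np.argmax(var)]
```

Usage: with existing simulations at strains `[-1.0, -0.5, 0.0, 0.2]` and candidates `np.linspace(-1.5, 1.5, 61)`, `next_simulation` picks the candidate farthest from the existing data (here a strain of 1.5). Maximum-variance selection is only the simplest design-of-experiments criterion; more elaborate acquisition rules are possible.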

References & Further Reading

[1] Linka, K., Hillgärtner, M., Abdolazizi, K. P., Aydin, R. C., Itskov, M., & Cyron, C. J. (2021). Constitutive artificial neural networks: A fast and general approach to predictive data-driven constitutive modeling by deep learning. Journal of Computational Physics, 429, 110010.

[2] Liu, X., Tian, S., Tao, F., & Yu, W. (2021). A review of artificial neural networks in the constitutive modeling of composite materials. Composites Part B: Engineering, 224, 109152.

[3] Rasmussen, C. E., & Williams, C. K. I. (2006). Gaussian processes for machine learning. Cambridge, MA: MIT Press.

[4] Aggarwal, A., Jensen, B. S., Pant, S., & Lee, C. H. (2023). Strain energy density as a Gaussian process and its utilization in stochastic finite element analysis: Application to planar soft tissues. Computer Methods in Applied Mechanics and Engineering, 404, 115812.

[5] Degruyter, W., Parmigiani, A., Huber, C., & Bachmann, O. (2019). How do volatiles escape their shallow magmatic hearth? Philosophical Transactions of the Royal Society A, 377(2139), 20180017.

-

Near-real-time monitoring of supraglacial lake drainage events across the Greenland Ice Sheet

Project institution:

Project supervisor(s): Dr Katie Miles (Lancaster University), Dr Henry Moss (Lancaster University), Prof Philipp Otto (University of Glasgow) and Dr Amber Leeson (Lancaster University)

Overview and Background

The drainage of supraglacial lakes plays an important role in modulating ice velocity, and thus the mass balance, of the Greenland Ice Sheet. To date, research has focused primarily on drainage events and their impacts on ice motion during the summer melt season, but recent work has shown that drainage events in winter can also affect ice dynamics. However, current approaches are restricted to a single season and/or one or two satellite sensors, whereas year-round, multi-sensor monitoring is needed to fully understand lake processes and their impacts. This PhD will use petabytes of available Earth Observation data and exascale computing to perform ice-sheet-scale analysis of year-round supraglacial lake drainage events on the Greenland Ice Sheet, and will produce scalable workflows for assessing the impact of lake drainage events in other glaciological environments.

Methodology and Objectives

This PhD project will advance capabilities in the detection of supraglacial lake drainage events on the Greenland Ice Sheet (GrIS) and assess the impact of these drainage events on ice dynamics. The PhD will commence with two exploratory projects (~6 months each), providing complementary experience in deploying exascale compute and performing big-data analysis.

Teaser Project 1: Near-real-time, automated, multi-sensor, year-round monitoring of supraglacial lake drainage

Current assessments of supraglacial lake drainage on the GrIS are largely restricted to a single season and/or satellite sensor. This is particularly true in winter, when existing approaches remain constrained to low-volume pipelines that analyse single orbits and provide limited temporal and spatial coverage. However, the wealth of remotely sensed imagery now available makes it possible to detect supraglacial lake drainage events at high temporal and spatial resolution across the entire ice sheet in near real time through exascale big-data analysis. This project will scale a SAR-based methodology for supraglacial lake drainage detection to ice-sheet-wide monitoring, using a high-data-volume approach that achieves near-daily temporal resolution by leveraging all available orbits and both C- and L-band SAR. The project will exploit access to exascale compute to deploy GPU-accelerated machine learning methods (e.g., convolutional neural networks or U-Nets) that can extract spatiotemporal patterns from large, complex volumes of multi-frequency inputs, supporting robust, scalable detection of drainage events across diverse glaciological settings. Training and validation will draw on timestamped ArcticDEM strips and ICESat-2 altimetry, with accuracy assessed at ice-sheet scale using cross-validation methods that account for dependence across space and time (e.g., Otto et al., 2024).
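Otto et al. (2024) review cross-validation for spatiotemporal data; one widely used idea is blocked cross-validation, in which whole space-time blocks are held out together so that autocorrelated neighbours of a test sample do not leak into the training set. A minimal NumPy sketch, assuming each labelled drainage sample carries projected coordinates in metres and a day of observation; the block sizes, fold count and function names are hypothetical, not taken from the project.

```python
import numpy as np


def spatiotemporal_block_folds(x, y, t, block_size_m, block_days, n_folds=5, seed=0):
    """Assign every sample to a CV fold so that each space-time block stays intact."""
    # Integer block index along each axis (space in metres, time in days)
    bx = np.floor(x / block_size_m).astype(int)
    by = np.floor(y / block_size_m).astype(int)
    bt = np.floor(t / block_days).astype(int)
    blocks = np.stack([bx, by, bt], axis=1)
    unique_blocks, block_id = np.unique(blocks, axis=0, return_inverse=True)
    block_id = block_id.reshape(-1)  # keep the inverse 1-D across NumPy versions
    # Shuffle whole blocks, then deal them round-robin into folds
    rng = np.random.default_rng(seed)
    perm = rng.permutation(len(unique_blocks))
    fold_of_block = np.empty(len(unique_blocks), dtype=int)
    fold_of_block[perm] = np.arange(len(unique_blocks)) % n_folds
    return fold_of_block[block_id]


def folds_to_splits(fold):
    # Yield (train_indices, test_indices) for each fold label
    for k in np.unique(fold):
        yield np.flatnonzero(fold != k), np.flatnonzero(fold == k)
```

Usage: `fold = spatiotemporal_block_folds(x, y, t, block_size_m=25_000, block_days=30)` and then `for train_idx, test_idx in folds_to_splits(fold): ...` to train and score a detector on each split. Block sizes would normally be chosen to exceed the spatial and temporal correlation ranges of the data.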

Teaser Project 2: Near-real-time evaluation of the impact of supraglacial lake drainage on the GrIS

As supraglacial lakes form progressively farther inland under rising atmospheric temperatures, the year-round impact of their drainage on ice dynamics is not yet well understood. Recent research has shown that winter drainage events are numerous, often occur as “cascade events”, and can cause short-term increases in ice velocity (Dean et al., under review). However, a systematic, year-round analysis of the impact of supraglacial lake drainage on ice dynamics across the GrIS has not yet been undertaken. This project will set up a pipeline on a sub-region of the ice sheet to analyse the impact of drainage events on ice dynamics in near real time, allowing later scaling up to the whole ice sheet. Access to exascale compute will enable comparison of the drainage-event database created in Project 1 with climate and glaciological data (e.g., temperature, precipitation, surface energy balance, ice surface velocity, ice thickness, and bed elevation). By applying scalable statistical methods such as changepoint analysis (offline detection), statistical process monitoring (online surveillance), and deep-learning-based anomaly detection, the impact of lake drainage events will be evaluated in near real time and assessed over a range of timescales. Additionally, the deep neural networks (DNNs) trained in Project 1 can be used for dimensionality reduction and for process monitoring based on data depths, which can point to further sources of, or reasons for, detected changes (Malinovskaya et al., 2024).
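To make the offline/online distinction concrete, a minimal sketch under stated assumptions: a hypothetical daily ice-surface-velocity series at one location, an offline least-squares search for a single change in mean (the simplest form of changepoint analysis), and a one-sided CUSUM chart, a classic statistical process monitoring tool, for online surveillance against a known pre-drainage baseline. The function names and the allowance `k` and threshold `h` (both in units of the baseline standard deviation) are illustrative; the methods planned in the project, including the depth-based monitoring of Malinovskaya et al. (2024), are more general.

```python
import numpy as np


def offline_mean_changepoint(series):
    """Index that best splits the series into two constant-mean segments (least squares)."""
    n = len(series)
    best_k, best_cost = None, np.inf
    for k in range(1, n):
        left, right = series[:k], series[k:]
        cost = ((left - left.mean()) ** 2).sum() + ((right - right.mean()) ** 2).sum()
        if cost < best_cost:
            best_k, best_cost = k, cost
    return best_k


def cusum_alarm(series, target_mean, sigma, k=0.5, h=5.0):
    """One-sided upper CUSUM; returns the index of the first alarm, or None."""
    s = 0.0
    for i, value in enumerate(series):
        z = (value - target_mean) / sigma  # standardise against the baseline
        s = max(0.0, s + z - k)            # accumulate only upward deviations
        if s > h:
            return i
    return None
```

In an online setting, `cusum_alarm` would be fed velocity observations as they arrive, with `target_mean` and `sigma` estimated from a pre-drainage reference period; `k = 0.5` is the conventional choice for detecting an upward shift of about one standard deviation. The offline search is O(n²) as written; at ice-sheet scale, cumulative-sum updates or a dedicated multiple-changepoint algorithm (e.g. PELT) would be used instead.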

Long-term pathway and objectives

At the end of Year 1, the student will select a pathway for further development. If Project 1 is chosen, the PhD will focus on leveraging additional compute resources and machine learning methods to extend the drainage detection methodology to additional, larger, and multi-modal data sources (such as optical imagery, to enhance summer detection), and on deploying the method over other regions such as Antarctic ice shelves, where real-time monitoring of supraglacial lake drainage events and cascades could provide a useful precursor for forecasting ice-shelf disintegration (Banwell et al., 2013). If Project 2 is chosen, the PhD will focus on exploiting exascale compute and machine learning methods to scale the analysis to the whole ice sheet, enabling near-real-time assessment of the impact of supraglacial lake drainage events on the GrIS and potentially in other glaciological environments.

For either pathway, the aims of the PhD are to:

- Advance understanding of year-round supraglacial lake drainage events on the Greenland Ice Sheet.

- Exploit exascale compute resources and machine learning to enable real-time detection of supraglacial lake drainage events at an unprecedented scale and assess their impacts.

- Produce scalable workflows that can be applied to supraglacial lake drainage events in other glaciological regions.

References & Further Reading

Banwell, A. et al., (2013), Breakup of the Larsen B Ice Shelf triggered by chain reaction drainage of supraglacial lakes, Geophysical Research Letters, 40, 22, 5872-5876, doi.org/10.1002/2013GL057694

Christoffersen, P. et al., (2018), Cascading lake drainage on the Greenland Ice Sheet triggered by tensile shock and fracture, Nature Communications, 9, 1064, doi.org/10.1038/s41467-018-03420-8

Dean, C. et al. (under review), A decade of winter supraglacial lake drainage across Northeast Greenland using C-band SAR, The Cryosphere Discussions

Dunmire, D. et al., (2025), Greenland Ice Sheet wide supraglacial lake evolution and dynamics: insights from the 2018 and 2019 melt seasons, Earth and Space Science, 12, 2, doi.org/10.1029/2024EA003793

Leeson, A. et al., (2015), Supraglacial lakes on the Greenland ice sheet advance inland under warming climate, Nature Climate Change, 5, 51–55, doi.org/10.1038/nclimate2463

Malinovskaya, A., Mozharovskyi, P., & Otto, P., (2024), Statistical process monitoring of artificial neural networks, Technometrics, 66, 1, 104–117, doi.org/10.1080/00401706.2023.2239886

Miles, K., et al., (2017), Toward monitoring surface and subsurface lakes on the Greenland Ice Sheet using Sentinel-1 SAR and Landsat-8 OLI imagery, Frontiers in Earth Science, 5, doi.org/10.3389/feart.2017.00058

Otto, P., Fassò, A., & Maranzano, P., (2024), A review of regularised estimation methods and cross-validation in spatiotemporal statistics, Statistics Surveys, 18, 299–340, doi.org/10.1214/24-SS150

-

Scalable Deep Learning for Biodiversity Monitoring under Real-World Constraints

Project institution:

Project supervisor(s): Dr Tiffany Vlaar (University of Glasgow), Prof Colin Torney (University of Glasgow), Prof Rachel McCrea (Lancaster University), Dr Thomas Morrison (University of Glasgow) and Dr Paul Eizenhöfer (University of Glasgow)

Overview and Background

Technological advances have ushered in the era of big data in ecology (McCrea et al., 2023). Deep learning and GPUs show promise for more effective biodiversity monitoring, which is vital for monitoring and mitigating the effects of climate change. However, many open questions remain about the biases and behaviour of deep neural networks under real-world constraints, such as unbalanced data and uncertain labels, and there is a pressing need for better benchmarks to train, test, and understand these models. Furthermore, Kaplan et al. (2020) found that the performance of neural language models improves predictably with model and dataset size. Training large models on vast ecological datasets therefore requires very large numbers of GPU hours, and achieving reliable performance will benefit greatly from exascale computing.

Methodology and Objectives

Project 1: Scalable Biodiversity Monitoring with Deep Learning by Understanding What Data Matters